In the first post I laid out the tools and lab setup, so in this one I’m going to discuss some results.

Description of overall test methodology

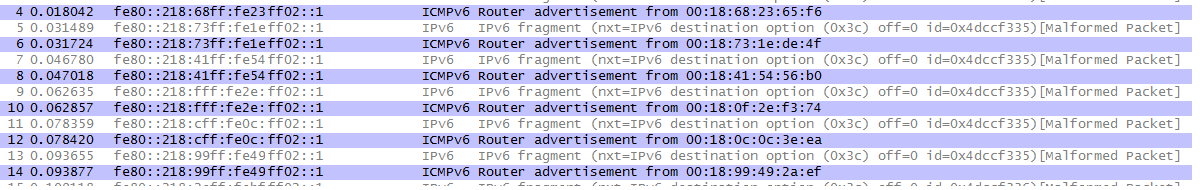

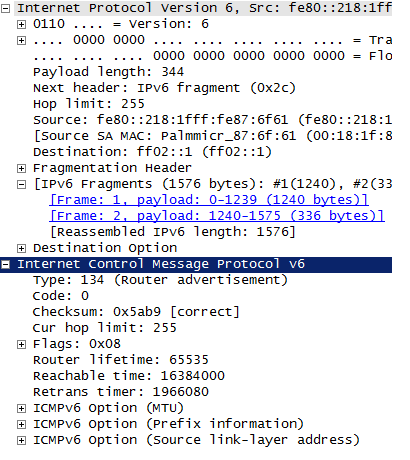

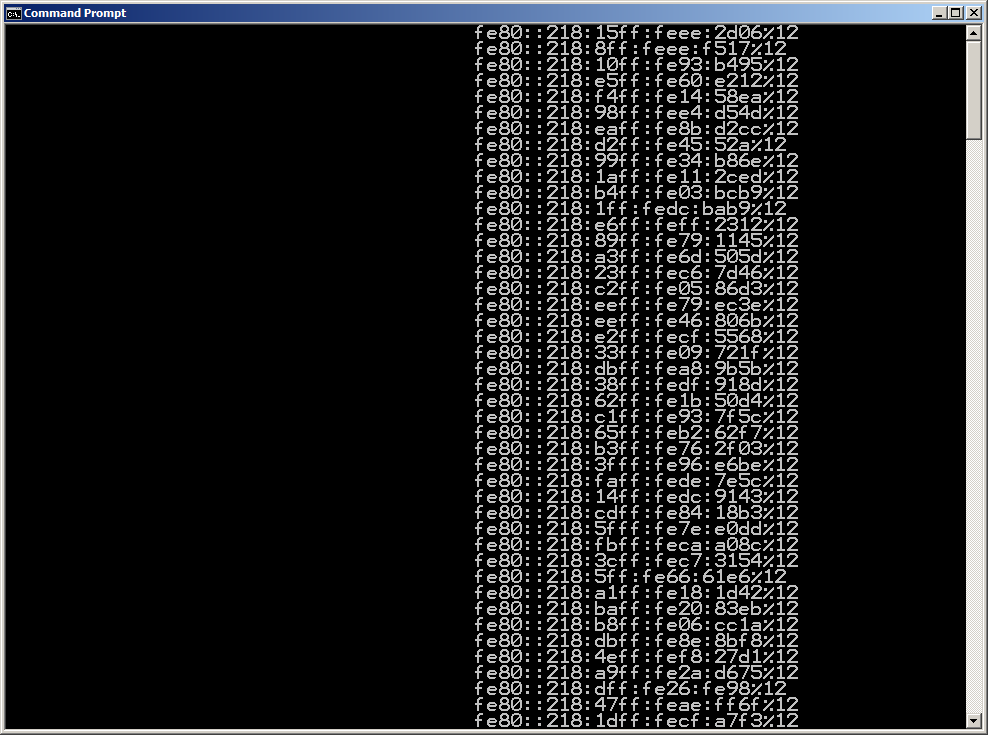

To evaluate the performance of the different setups for analyzing capture data, both tcpdump and pcap_extractor (see last post) were used. For the tests, five large capture files were created by merging various sample traffic dumps with mergecap. Each file contained a variety of protocols (including iSCSI and FCoE packets). The resulting files were ∼40, ∼80, ∼200, ∼500, and ∼800 GB in size, and each was analyzed with both tools. For all tests the filter expressions for tcpdump and pcap_extractor were configured to match a specific source IP and a specific destination IP belonging to iSCSI packets contained in the capture file. Additionally, pcap_extractor was “instructed” to look for a search string (formatted like a credit card number).

To address the performance bottleneck, which is I/O throughput (again, see last post), two different setups of the testing environment (see above) were implemented: the first used a RAID 0 array of four SSDs, the second used four individual SSDs, each of them holding and processing only a quarter of the analyzed capture file.

The standard UNIX time command was used to measure execution time. Additionally, the analysis tools were started with the highest possible scheduling priority to make sure they ran with the maximum of available resources. This is a sample command line invoking the test:

/usr/bin/time -hp /usr/bin/nice -n -19 ./pcap_test2 -i $i/in.pcap -o $i/out.pcap -f "ip src 192.168.1.207 and ip dst 192.168.1.208" -s "5486000000620012" > $i/out &
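
For the setup with four individual SSDs, one such invocation per drive would run at the same time. The following is only a rough Python sketch of that idea, not the script used for the tests: the mount points in `MOUNTS` are hypothetical, the flags are copied from the sample command line above, and the negative nice value normally requires root privileges.

```python
import shlex
import subprocess
import time

# Hypothetical mount points, one per SSD; each is assumed to hold
# one quarter of the capture file as in.pcap.
MOUNTS = ["/mnt/ssd0", "/mnt/ssd1", "/mnt/ssd2", "/mnt/ssd3"]
BPF_FILTER = "ip src 192.168.1.207 and ip dst 192.168.1.208"
SEARCH_STRING = "5486000000620012"


def build_command(mount, tool="./pcap_test2"):
    """Return the argv list for one run, mirroring the sample command line."""
    return [
        "/usr/bin/nice", "-n", "-19",
        tool,
        "-i", f"{mount}/in.pcap",
        "-o", f"{mount}/out.pcap",
        "-f", BPF_FILTER,
        "-s", SEARCH_STRING,
    ]


def run_all(mounts):
    """Start one process per mount point, wait for all, return wall-clock seconds."""
    start = time.monotonic()
    procs = []
    for mount in mounts:
        out = open(f"{mount}/out", "wb")  # same role as "> $i/out" in the shell
        procs.append((subprocess.Popen(build_command(mount), stdout=out), out))
    for proc, out in procs:
        proc.wait()
        out.close()
    return time.monotonic() - start


if __name__ == "__main__":
    # Print the commands only; call run_all(MOUNTS) once the tool and
    # the mount points actually exist on the test machine.
    for mount in MOUNTS:
        print(shlex.join(build_command(mount)))
```

Measuring the elapsed time around all four processes gives the figure that matters for this setup, namely how long it takes until the slowest quarter is finished.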

Results

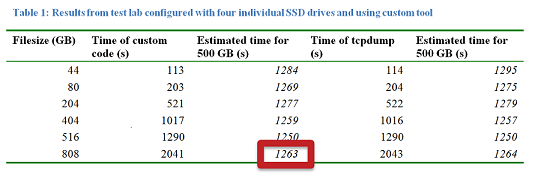

The most interesting results table is shown below:

Conclusions

So extracting a given search string from a 500 GB file could actually be done in about 21 minutes, employing readily available tools and COTS hardware costing about 3K EUR (as of March 2011). This means that an attacker in possession of (large) data sets resulting from previous eavesdropping attacks will most likely succeed in getting the exact data she’s going after. Furthermore, the time needed scales linearly with the file size, so processing a 1 TB data volume would presumably have taken ∼42 minutes, a 2 TB file ∼84 minutes, and so on. In addition, SSD prices are constantly declining.
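
The extrapolation is simple proportional arithmetic. As a small sketch, assuming decimal units (1 GB = 1000 MB) and that the linear scaling holds beyond the measured file sizes, the 500 GB / 21 minute data point translates into an effective throughput and into estimates for larger volumes like this:

```python
# Reference measurement from the tests: ~500 GB processed in ~21 minutes.
REF_SIZE_GB = 500
REF_MINUTES = 21


def estimate_minutes(size_gb):
    """Estimated processing time, assuming time grows linearly with file size."""
    return REF_MINUTES * size_gb / REF_SIZE_GB


def throughput_mb_per_s():
    """Effective read throughput implied by the reference measurement (decimal MB)."""
    return REF_SIZE_GB * 1000 / (REF_MINUTES * 60)


if __name__ == "__main__":
    for size_gb in (1000, 2000):
        print(f"{size_gb} GB: ~{estimate_minutes(size_gb):.0f} minutes")
    print(f"effective throughput: ~{throughput_mb_per_s():.0f} MB/s")
```

The implied rate of a bit under 400 MB/s is an average over the whole run, so it includes everything the tool does besides reading from disk.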

Thus it could be shown that the perception that the sheer volume of data gained from eavesdropping attacks on high speed links might prohibit an attacker from analyzing this data is, well, simply not correct ;-).

Risk Assessment & Mitigating Controls

Several factors come into play when trying to assess the actual risk of this type of attack. Let’s put it like this: once an attacker either has physical access to a fibre at some point or is able to get into the transport path by means of certain network-based attacks – which are going to be covered in another, future post – collecting and analyzing the data is an easy task. If you have sufficient reasons for trusting the party actually implementing the connection (e.g. a carrier offering Metro Ethernet services) and “the overall circumstances”, you might rely on the isolation properties provided by the service and topology. If you either don’t have sufficient reasons to trust (some discussion on approaches to “evaluate trustworthiness” can be found here or here) or operate in a highly regulated environment, using encryption technologies on layer 2 (like these or these) might be a safer approach.

In the next post we’ll discuss the cloud based test setup, together with its results. Stay tuned &

have a great day,

Enno
