Hello again.

Andrei Costin (of the http://firmware.re project) here, with the second post in a series of guest postings courtesy of ERNW (thanks Niki and Enno!).

A few days ago, the first CCS’16 summary post went online: https://insinuator.net/2016/11/introduction-ccs16-day-1-24th-october-2016/

It summarized five presentations from the 6th Annual Workshop on Security and Privacy in Smartphones (SPSM’16). In short, it covered over-the-top and phone number abuse, smartphone fingerprinting, improving app privacy and protection/security, and app privacy ranking.

I do hope you enjoyed it. If you have not had a chance to read it yet, please check it out at the link above, or get the papers here (behind a paywall):

http://dl.acm.org/citation.cfm?id=2994459&picked=prox&CFID=868032114&CFTOKEN=19736804

Without further ado, here come the next summaries.

Stay secure.

Andrei.

CCS’16 – Day 2 – 25th October 2016

“Colorful like a Chameleon: Security Nightmares of Embedded Systems”

Timo Kasper gave a very entertaining invited industrial talk: an interesting recap of the main failures in embedded security that he (and his research partner, David Oswald) encountered or experimented with.

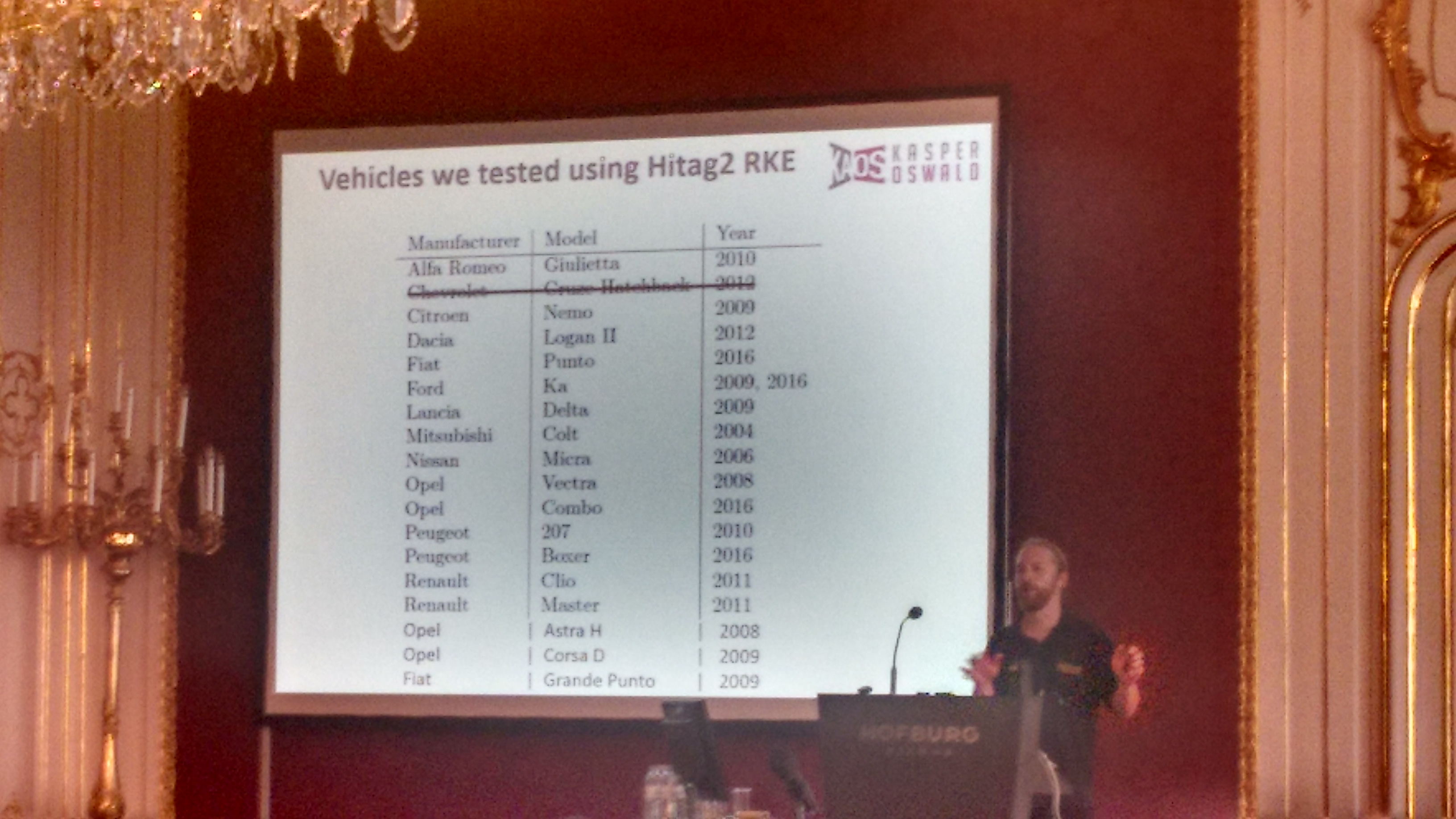

One example was the Hitag2-based RKE (Remote Keyless Entry) systems, which affect a large number of vehicles and car manufacturers:

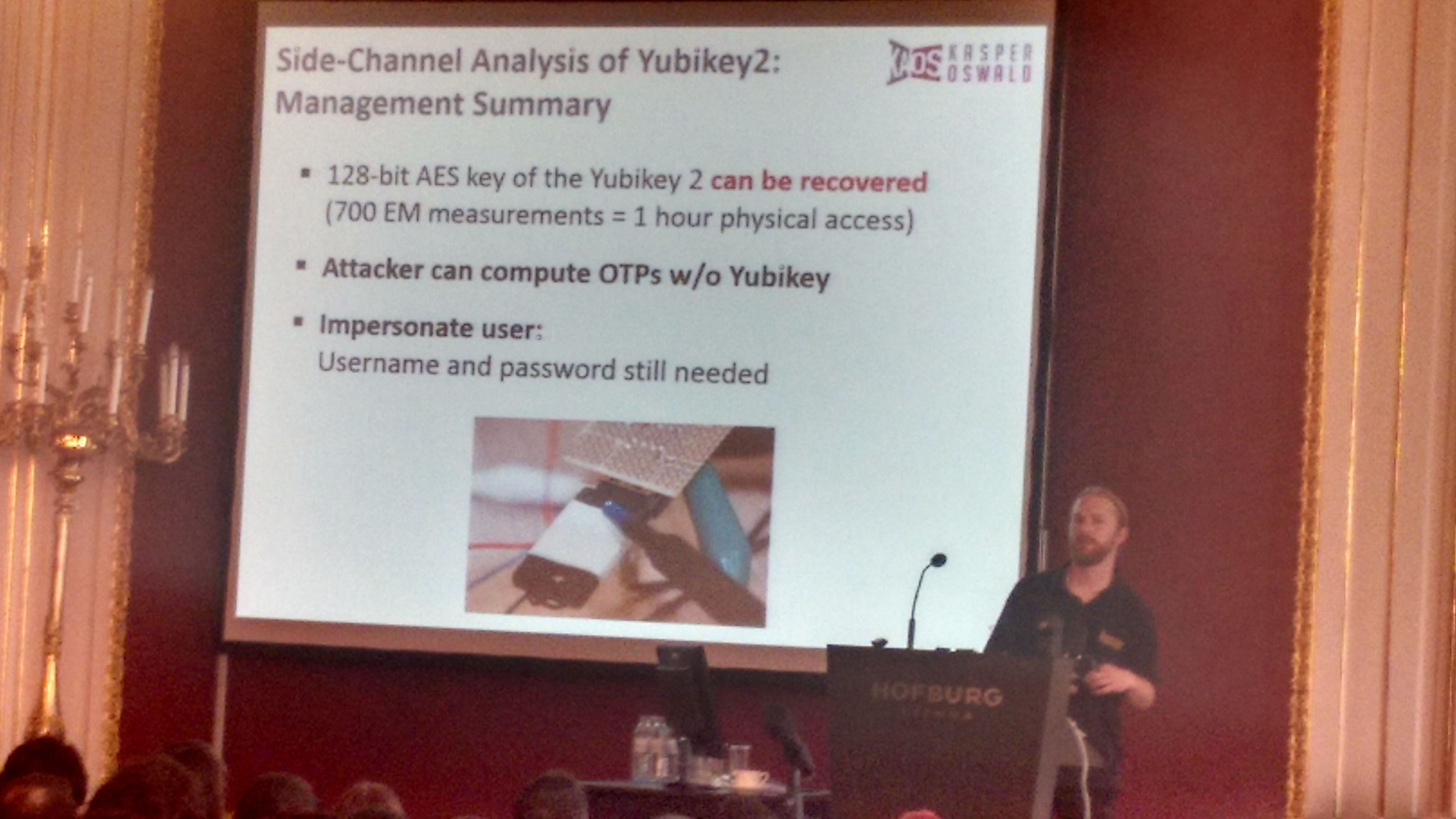

Another example was the Yubikey 2FA token, where the 128-bit AES key of the Yubikey 2 can be recovered with around 1 hour of physical access and 700 EM side-channel measurements:

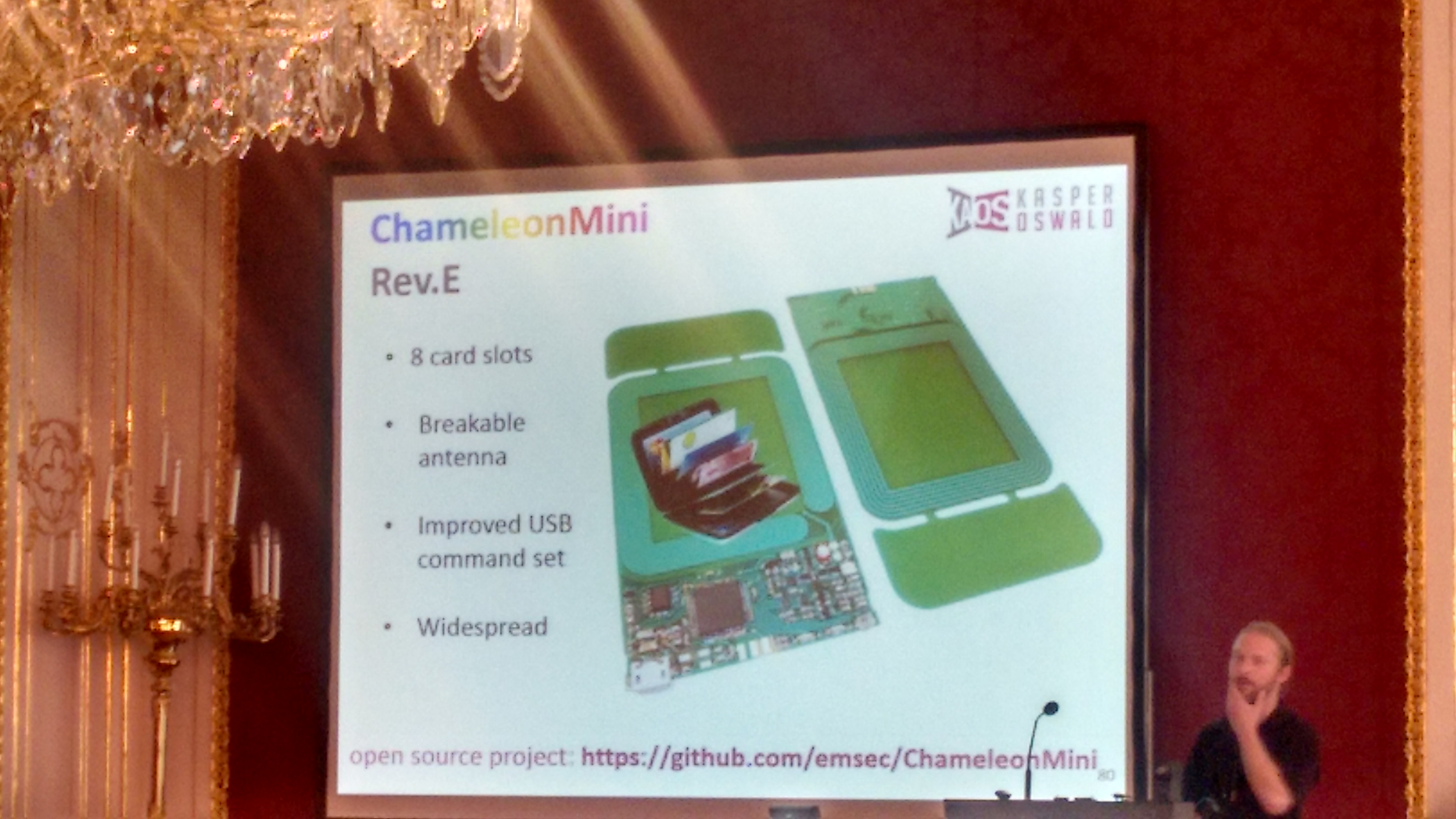

The final example was Mifare Classic (for which I happen to have written the MFCUK tool, https://github.com/nfc-tools/mfcuk, in 2009, when I was just getting to know the security industry).

Now, thanks to Timo & Co., you can easily play with Mifare Classic and DesFire using the ultra-portable sniffer/cloner/emulator called ChameleonMini.

You can also check out the code and contribute back to ChameleonMini on their GitHub. I am really looking forward to playing with one of these pretty soon, and the price is also quite inviting.

LINKS: https://github.com/emsec/ChameleonMini

“The Misuse of Android Unix Domain Sockets and Security Implications”

In this work, the authors present the first systematic study of the security properties of Unix domain socket usage on Android. For this purpose, they also created a tool called SInspector, which vets apps and system daemons to find potentially vulnerable ones.

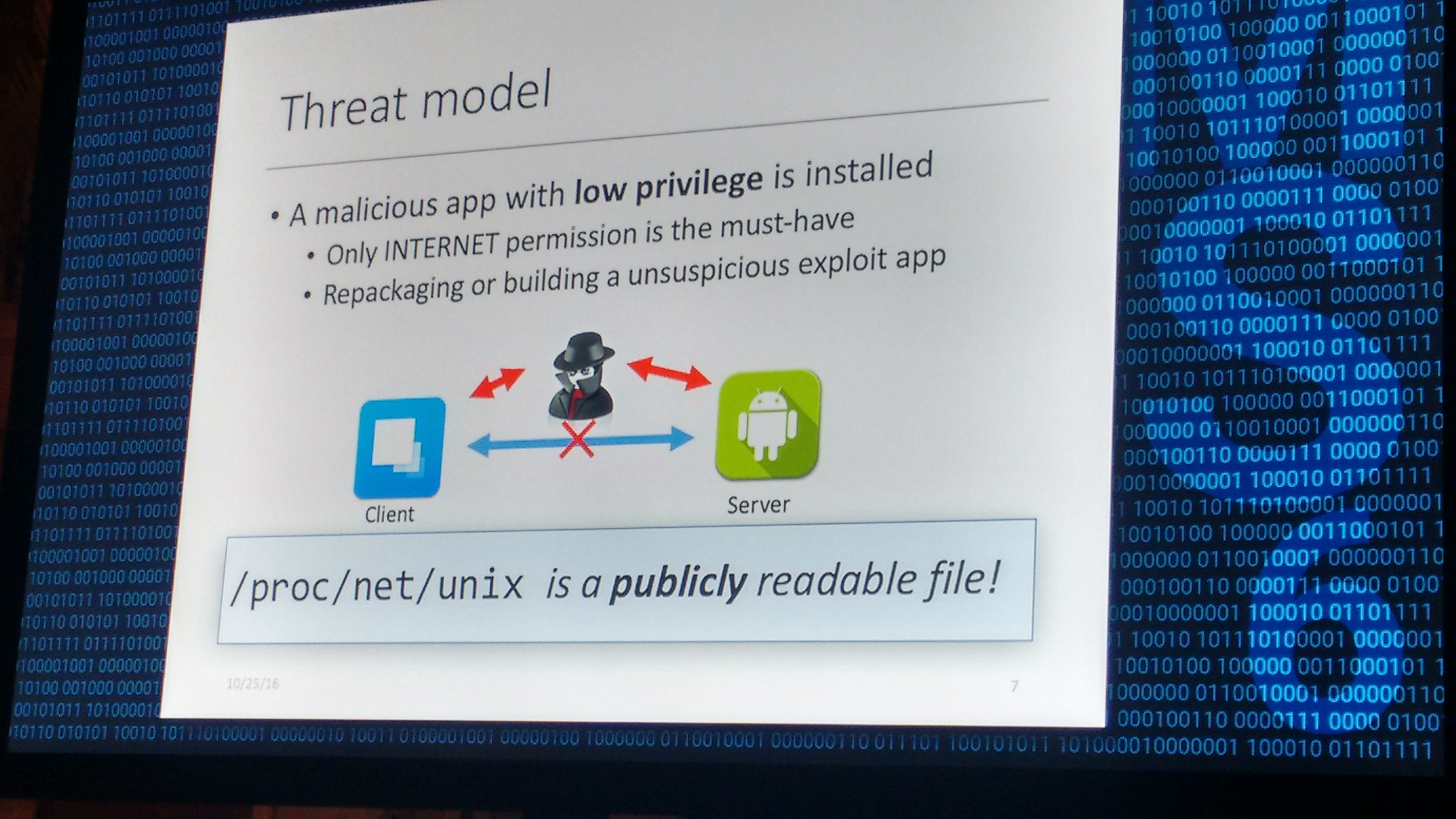

The first observation the authors make is that /proc/net/unix is a publicly readable file, so any app can enumerate the Unix domain sockets (and their addresses) present on the device. Their threat model revolves around this observation.
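To illustrate why a world-readable /proc/net/unix matters, here is a minimal Python sketch (not SInspector's code) that parses the Linux /proc/net/unix format, whose columns are Num, RefCount, Protocol, Flags, Type, St, Inode and an optional Path, with abstract-namespace names printed with a leading "@". The sample lines and socket names are hypothetical.

```python
from typing import List, NamedTuple


class UnixSocketEntry(NamedTuple):
    inode: int
    path: str
    abstract: bool  # True for abstract-namespace names (shown with a leading "@")


def parse_proc_net_unix(text: str) -> List[UnixSocketEntry]:
    """Parse /proc/net/unix contents and return the sockets that have a name."""
    entries = []
    for line in text.splitlines()[1:]:  # the first line is the column header
        # Columns: Num RefCount Protocol Flags Type St Inode [Path]
        fields = line.split(None, 7)
        if len(fields) < 8:
            continue  # unnamed socket (no Path column) or blank line
        path = fields[7]
        entries.append(UnixSocketEntry(int(fields[6]), path, path.startswith("@")))
    return entries


# Hypothetical sample in the /proc/net/unix format (names are made up).
SAMPLE = """\
Num       RefCount Protocol Flags    Type St Inode Path
0000000000000000: 00000002 00000000 00010000 0001 01 20481 @com.example.app.ipc
0000000000000000: 00000002 00000000 00010000 0001 01 19876 /dev/socket/example_daemon
0000000000000000: 00000003 00000000 00000000 0001 03 21555
"""

for entry in parse_proc_net_unix(SAMPLE):
    kind = "abstract" if entry.abstract else "filesystem"
    print(f"{entry.inode:>6} {kind:<10} {entry.path}")
```

On a Linux box, feeding it the output of `open("/proc/net/unix").read()` lists the live named sockets; note that newer Android versions may restrict app access to this file.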

They evaluated SInspector on a total of 14,644 up-to-date Google Play apps and 60 system daemons. They used https://apkpure.com/ to work around Google’s limitations on downloading the apps’ APKs. Of these apps, 3,734 (~25%) use Unix domain socket APIs/syscalls, the majority of them with ABSTRACT addresses. SInspector reported only 67 apps, and only 45 of them were found to be exploitable; in summary, 45 out of 14,644 apps were found exploitable. On the daemons side, 9 out of the 12 reported were found to be exploitable.
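Why ABSTRACT addresses are interesting: on Linux (and thus Android), an abstract-namespace name is not backed by a file, so no filesystem permissions protect it, and any local process that learns the name (e.g., from /proc/net/unix) can connect. The minimal Python sketch below assumes a Linux host; the socket name is hypothetical, and the SO_PEERCRED UID check is one generic way for a server to authenticate its peer, not necessarily the fix the authors recommend.

```python
import os
import socket
import struct

# Hypothetical abstract-namespace name: the leading NUL byte selects the
# abstract namespace, so no file (and no file permission) is involved.
NAME = b"\0example-ipc-" + str(os.getpid()).encode()


def peer_uid(conn: socket.socket) -> int:
    """Return the UID of the connected peer via SO_PEERCRED (Linux-only)."""
    creds = conn.getsockopt(socket.SOL_SOCKET, socket.SO_PEERCRED, struct.calcsize("3i"))
    _pid, uid, _gid = struct.unpack("3i", creds)
    return uid


server = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
server.bind(NAME)
server.listen(1)

# Any local process that knows NAME can connect; there is no filesystem permission check.
client = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
client.connect(NAME)
conn, _ = server.accept()

# Server-side mitigation sketch: only serve peers running under an expected UID.
ALLOWED_UIDS = {os.getuid()}  # hypothetical policy: same UID as the server
allowed = peer_uid(conn) in ALLOWED_UIDS
print("peer allowed:", allowed)

for s in (conn, client, server):
    s.close()
```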

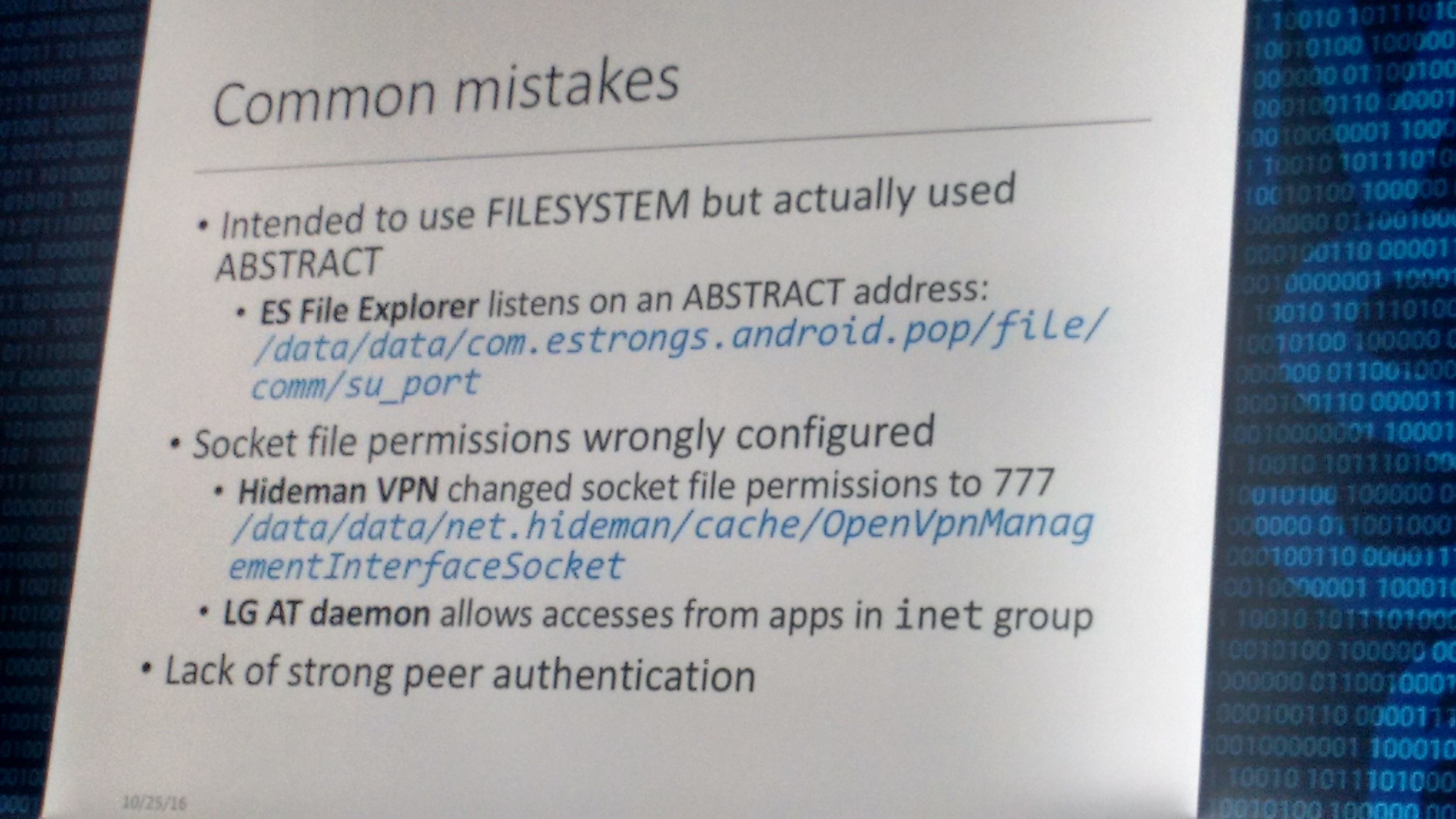

The authors also summarize the most common mistakes they found.

Overall, an interesting study and a good avenue for compromise.

I personally think that, in this case, providing the cumulative number of installs/downloads for both groups of apps (i.e., the 45 and the 14,644) would have made the overall impact clearer. For example, one exploitable case study is “ES File Explorer”, which has ~300 million downloads, an impressive potentially exploitable user base. But again, the authors do not provide the number of installs for the entire set of 14,644.

LINKS: https://sites.google.com/site/unixdomainsocketstudy/home

“Prefetch Side-Channel Attacks: Bypassing SMAP and Kernel ASLR”

Daniel Gruss presented their work titled “Prefetch Side-Channel Attacks: Bypassing SMAP and Kernel ASLR”.

They propose the Prefetch Side-Channel, a new class of generic side-channel attacks that exploit weaknesses in the prefetch instructions.

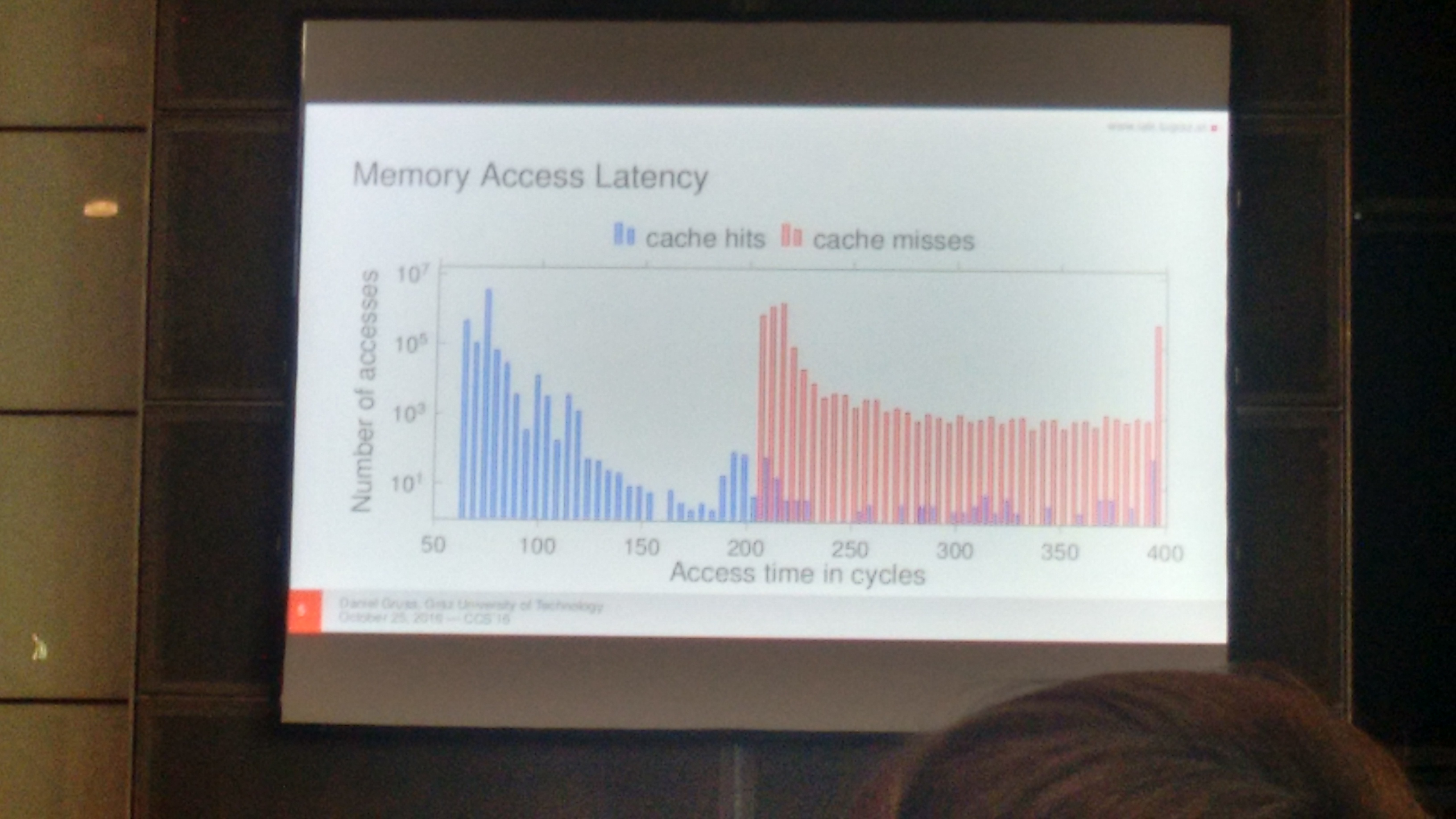

Their work starts from the observation that prefetch instructions do not check privileges and leak timing information.

Using such attacks, an unprivileged attacker can accurately map the address space and defeat KASLR. For example, an attacker can exploit this to locate a driver in the kernel (i.e., defeat KASLR, then use ROP) and to translate virtual addresses to physical ones.
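The KASLR-breaking step boils down to a search: probe every candidate kernel base many times and keep the one whose timing looks “mapped”. Below is a minimal Python sketch of that decision logic only, assuming (as a simplification) that prefetching a mapped address is measurably faster than an unmapped one. The real prefetch-and-time primitive needs native code (see the IAIK repository), so here it is replaced by a synthetic, hypothetical `synthetic_probe`; the region start, slot size and slot count are illustrative values, not real kernel parameters.

```python
import random
import statistics
from typing import Callable, Sequence


def find_mapped_slot(candidates: Sequence[int],
                     probe: Callable[[int], float],
                     rounds: int = 32) -> int:
    """Return the candidate address with the lowest median probe time.

    Taking the median over several rounds makes the decision robust
    against occasional outliers (interrupts, cache noise, ...).
    """
    def median_time(addr: int) -> float:
        return statistics.median(probe(addr) for _ in range(rounds))

    return min(candidates, key=median_time)


# --- Hypothetical, synthetic setup (not real measurements) ---
REGION_START = 0xFFFFFFFF80000000   # illustrative kernel text region start
SLOT_SIZE = 2 * 1024 * 1024         # illustrative 2 MiB slot alignment
NUM_SLOTS = 64                      # illustrative number of candidate slots

rng = random.Random(1)
candidates = [REGION_START + i * SLOT_SIZE for i in range(NUM_SLOTS)]
secret_base = rng.choice(candidates)


def synthetic_probe(addr: int) -> float:
    # Pretend mapped addresses take ~100 "cycles" and unmapped ones ~200, plus noise.
    base = 100 if addr == secret_base else 200
    return base + rng.uniform(0, 20)


guess = find_mapped_slot(candidates, synthetic_probe)
print(hex(guess), guess == secret_base)
```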

Their attacks were shown to work on a handful of Intel Core i3/i5/i7 and Xeon processors, as well as on ARMv8 processors, in various native and virtualized settings (desktop, laptop, smartphone, cloud), making this quite a generic attack technique.

One countermeasure to this class of attacks is stronger kernel isolation, such that syscalls and unrelated kernel threads do not run in the same address space as user threads. Implementing this requires only a few changes to system kernels and incurs a performance penalty of between 0.06% and 5% (one question from the audience was whether the Linux kernel community would accept a patch that adds a 5% performance overhead).

It looks like KASLR is a dead horse that keeps being beaten over and over again with different techniques. There is a long post by spender of grsecurity on why KASLR is likely a failure:

https://forums.grsecurity.net/viewtopic.php?f=7&t=3367

While ASLR was invented for user space, where it provides a good degree of mitigation (provided that the applications do not suffer dramatically from info leaks), KASLR fails to deliver the same, and the many papers on the topic just demonstrate this over and over again.

To experiment with the attacks, you can check out their GitHub repository.

LINKS: https://github.com/IAIK/prefetch

“Breaking Kernel Address Space Layout Randomization with Intel TSX”

Yeongjin Jang presented the paper “Breaking Kernel Address Space Layout Randomization with Intel TSX”.

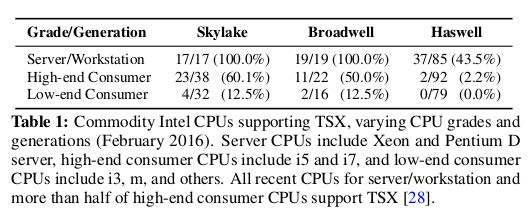

They introduce a highly stable timing attack against KASLR, codenamed DrK (De-Randomize Kernel address space). Their attack is based on the observation that (some) Intel CPUs support Intel TSX (Transactional Synchronization Extensions).

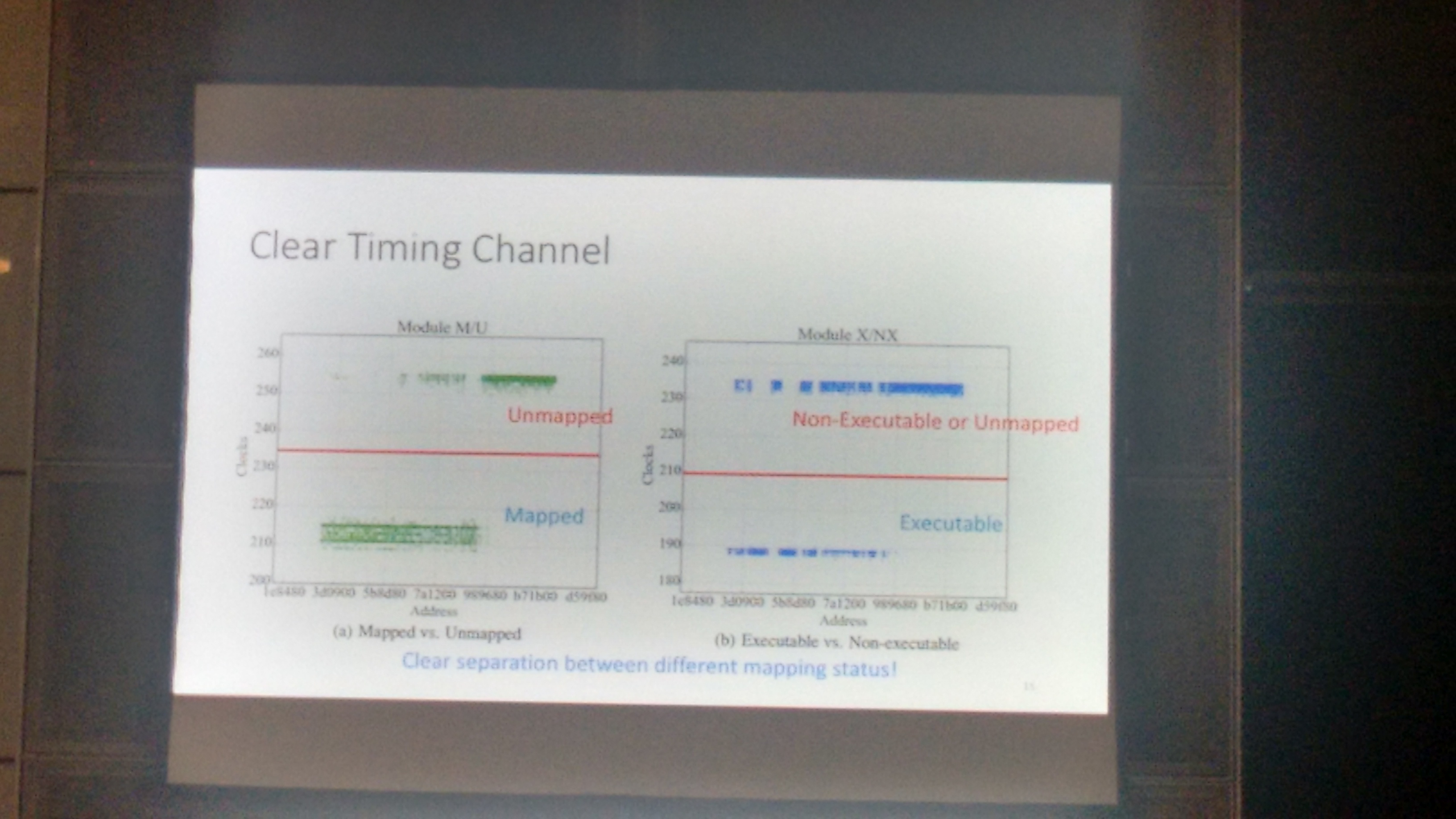

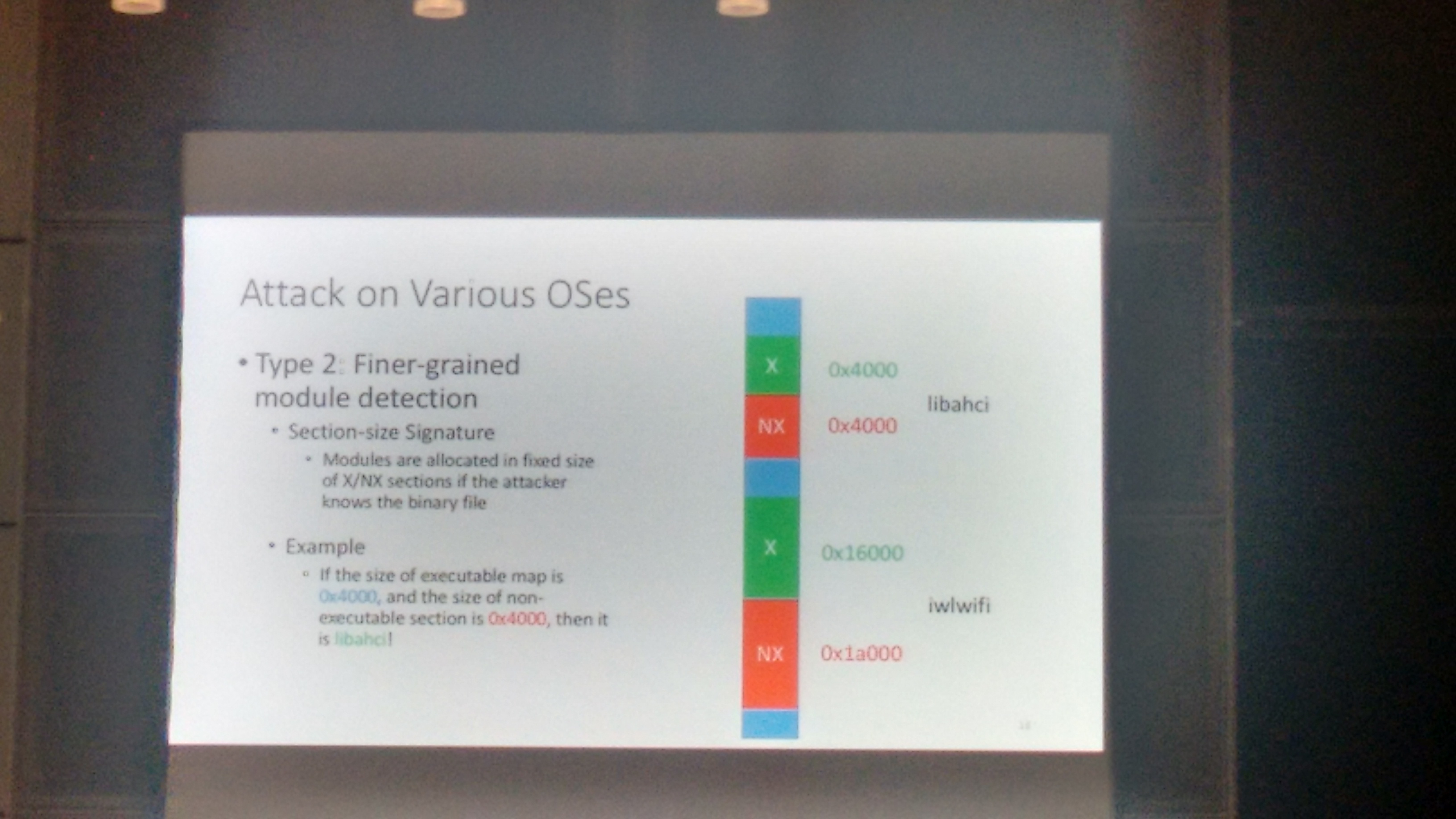

A design feature of TSX is that it aborts a transaction without notifying the underlying kernel. This happens even when the transaction fails due to a critical error (e.g., page fault, access violation), which would traditionally require kernel involvement. By precisely timing these aborts, an attacker can determine the mapping status (i.e., mapped versus unmapped) and the execution status (i.e., executable versus non-executable) of the privileged kernel address space.

Because the support is in the CPU hardware, the technique is OS-independent, and it was shown to work on all major OSes. One limitation, though: it works only on Intel CPUs.

As for countermeasures, they seem non-trivial. CPU modifications/recalls are expensive, hence basically infeasible. Turning off TSX from software/firmware/microcode is also non-trivial, but potentially doable (microcode updates?). A solution to be tested in the long run is the use of coarse-grained timers.
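The per-page decision DrK makes can be summarized as a two-threshold classifier over the abort timings of a memory read and of a jump inside a TSX transaction. Below is a minimal Python sketch, assuming that faulting accesses to mapped pages abort faster than to unmapped ones, and that jumps to executable pages abort faster than to non-executable ones. The thresholds and timings are hypothetical and would have to be calibrated per CPU; measuring the timings themselves needs native TSX code (see the DrK repository).

```python
from enum import Enum


class PageStatus(Enum):
    UNMAPPED = "unmapped"
    MAPPED_NX = "mapped, non-executable"
    EXECUTABLE = "mapped, executable"


def classify_page(read_abort_cycles: float,
                  jump_abort_cycles: float,
                  mapped_threshold: float,
                  exec_threshold: float) -> PageStatus:
    """Classify a kernel page from TSX abort timings (lower = faster abort)."""
    if read_abort_cycles >= mapped_threshold:
        # Slow abort on a memory access: classify as unmapped.
        return PageStatus.UNMAPPED
    if jump_abort_cycles < exec_threshold:
        # Fast abort on a jump into the page: classify as executable.
        return PageStatus.EXECUTABLE
    return PageStatus.MAPPED_NX


# Hypothetical thresholds and timings, for illustration only.
MAPPED_T, EXEC_T = 220.0, 200.0
print(classify_page(250, 250, MAPPED_T, EXEC_T))
print(classify_page(205, 230, MAPPED_T, EXEC_T))
print(classify_page(205, 185, MAPPED_T, EXEC_T))
```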

To experiment with the attacks, you can check out their GitHub repository.

LINKS: https://github.com/sslab-gatech/DrK

“A Systematic Analysis of the Juniper Dual EC Incident”

Jacob Maskiewicz presented the paper “A Systematic Analysis of the Juniper Dual EC Incident”.

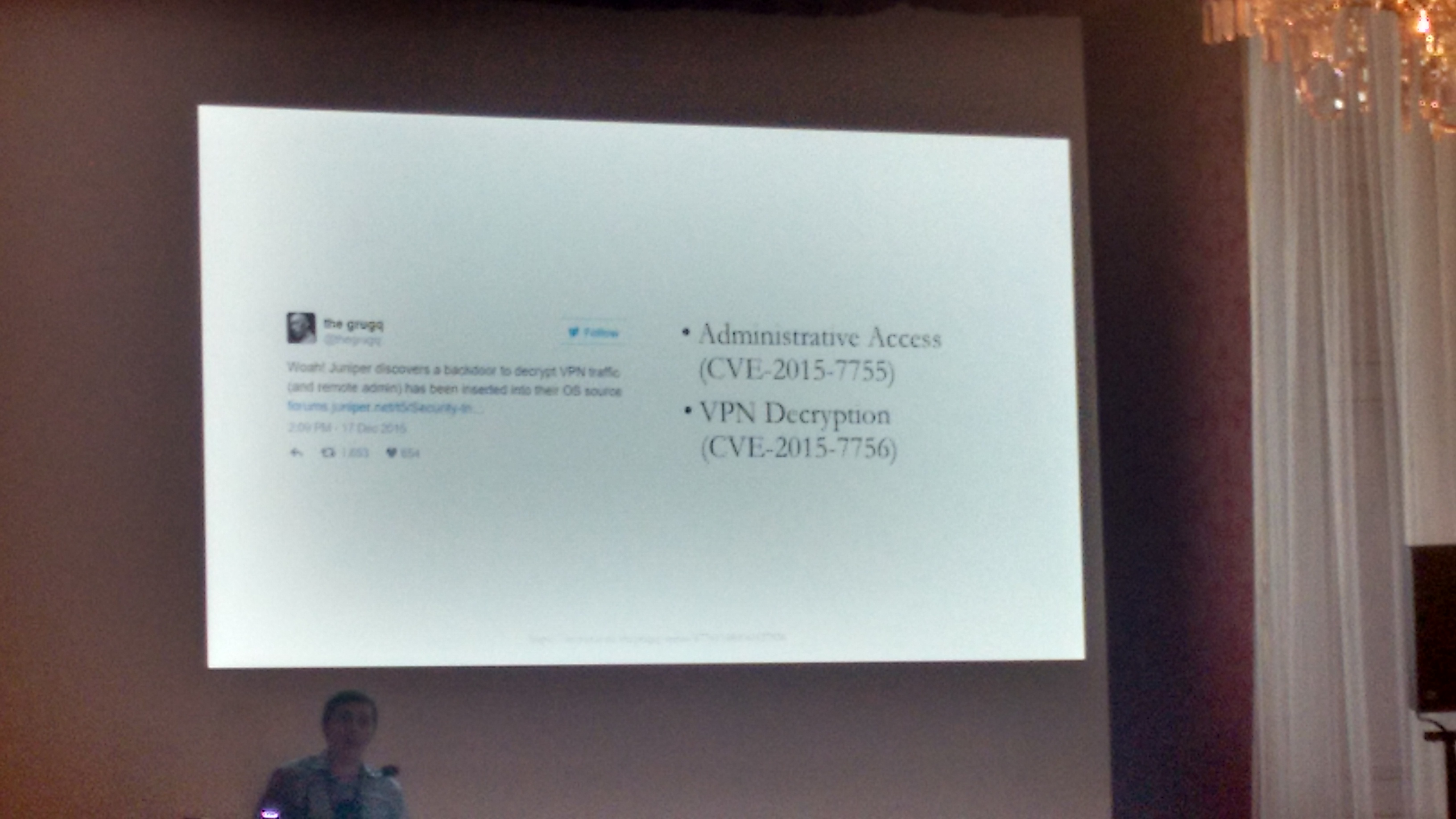

This research was motivated by the Juniper Networks disclosure (in December 2015) of multiple security vulnerabilities in ScreenOS stemming from unauthorized code in the OS. The paper is the result of a systematic and independent analysis of the randomness and Dual EC implementation in ScreenOS, and it validates the vulnerabilities that allow passive VPN decryption.

Some of the initial online posts and findings, which prompted more attention to the issue:

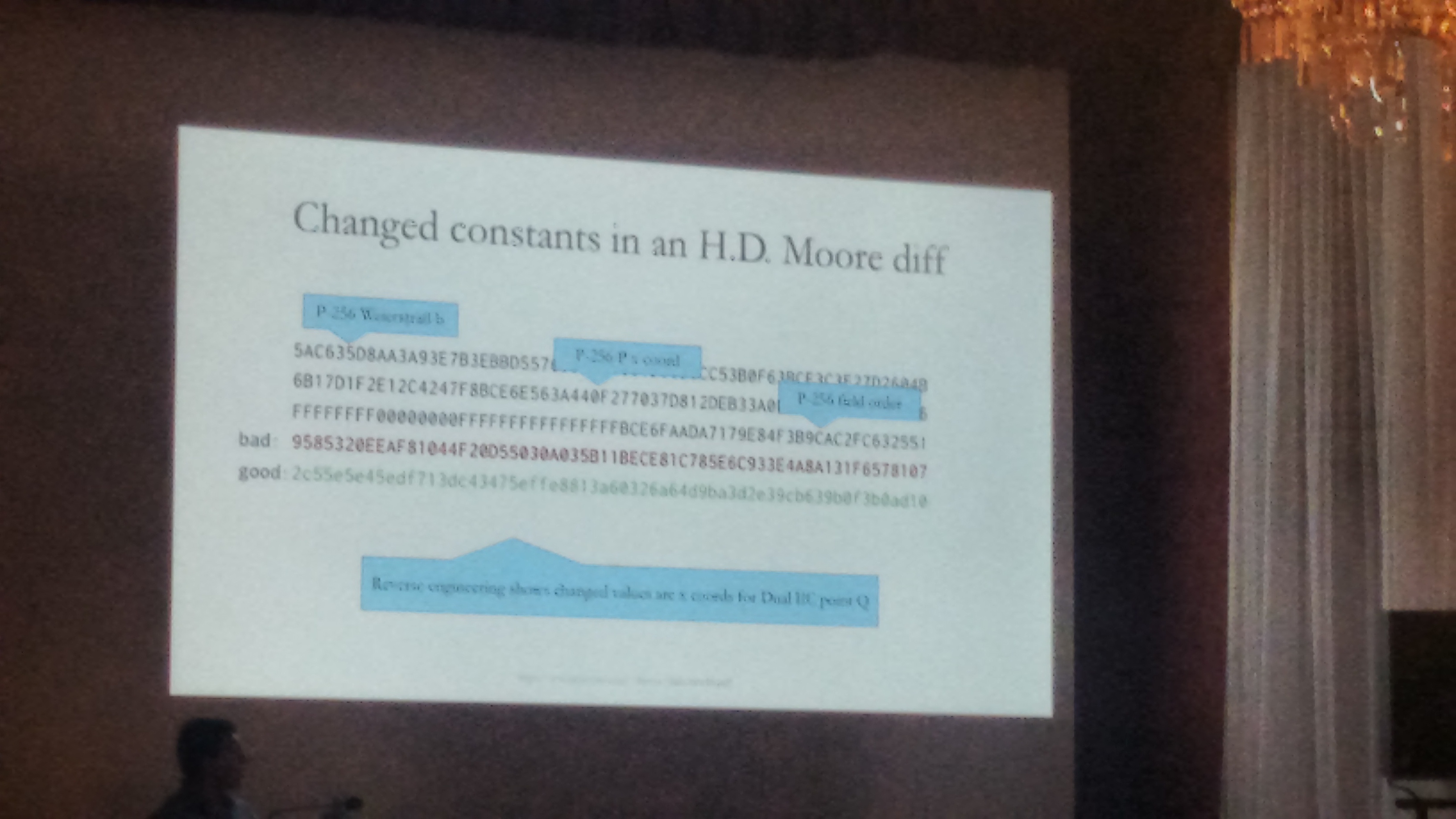

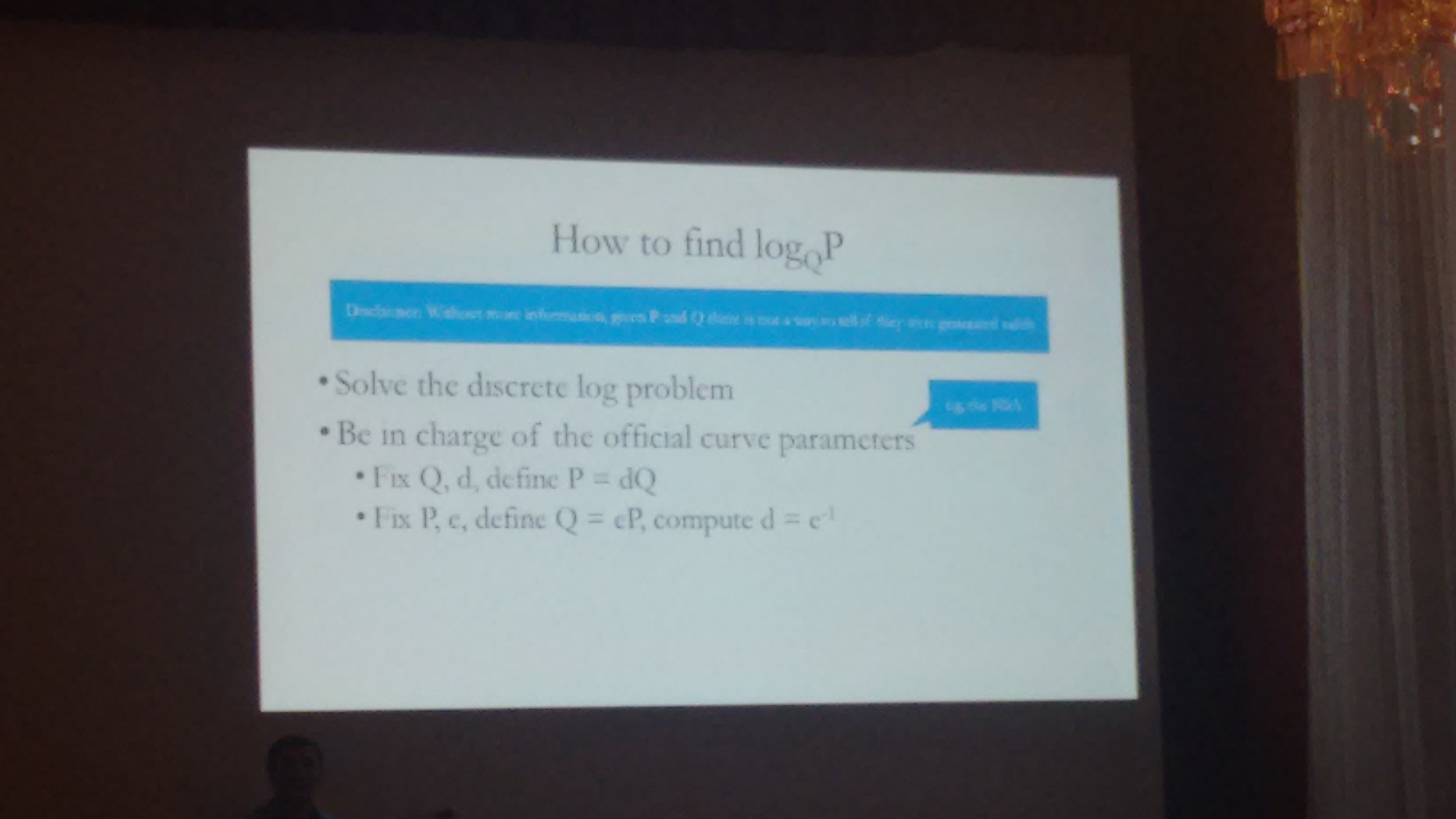

Dual EC is known to be insecure if the attacker can choose the elliptic curve points (P, Q). The diffs published by H.D. Moore show a change of the Q value. There are two problems with the NSA-designed Dual EC PRNG.

First, an attacker who knows the discrete logarithm relating the two points (i.e., a scalar d such that P = dQ) and is able to observe a few bytes of PRNG output can infer the internal state of the PRNG and therefore predict all future output.

Second, given a value of Q, it is impossible to tell whether whoever chose it also knows the corresponding discrete logarithm, which brings us back to the first problem.

Therefore, the “unusual” change of the Q value in the ScreenOS firmware is cause for suspicion.
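To make the first problem concrete, here is a toy Python sketch of a simplified Dual EC generator and its backdoor. Assumptions: a made-up curve y^2 = x^3 - 3x + b over the prime field of size 2^127 - 1 (not P-256), a hypothetical point Q and secret d with P = dQ, and no output truncation (the real Dual EC drops 16 bits of each output, so the attacker has to try about 2^16 candidate points instead of one). It only illustrates why knowing d lets someone who sees one output predict the next.

```python
from typing import Optional, Tuple

Point = Optional[Tuple[int, int]]  # None represents the point at infinity

FIELD_P = 2**127 - 1   # Mersenne prime with FIELD_P % 4 == 3 (easy square roots)
A = FIELD_P - 3        # curve coefficient a = -3 (mod FIELD_P)
Q = (5, 11)            # hypothetical public point Q
# Choose b so that Q lies on y^2 = x^3 + a*x + b.
B = (Q[1] ** 2 - Q[0] ** 3 - A * Q[0]) % FIELD_P


def ec_add(p1: Point, p2: Point) -> Point:
    """Affine point addition on the short Weierstrass curve."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % FIELD_P == 0:
        return None  # R + (-R) = infinity
    if p1 == p2:
        lam = (3 * x1 * x1 + A) * pow(2 * y1, -1, FIELD_P) % FIELD_P
    else:
        lam = (y2 - y1) * pow((x2 - x1) % FIELD_P, -1, FIELD_P) % FIELD_P
    x3 = (lam * lam - x1 - x2) % FIELD_P
    return (x3, (lam * (x1 - x3) - y1) % FIELD_P)


def ec_mul(k: int, pt: Point) -> Point:
    """Double-and-add scalar multiplication k * pt."""
    result: Point = None
    while k > 0:
        if k & 1:
            result = ec_add(result, pt)
        pt = ec_add(pt, pt)
        k >>= 1
    return result


D_SECRET = 0x1337C0DE      # hypothetical trapdoor known to whoever chose P
P = ec_mul(D_SECRET, Q)    # public point P = d * Q


def dual_ec_step(state: int) -> Tuple[int, int]:
    """One simplified round: s' = x(s * P), output r = x(s' * Q)."""
    new_state = ec_mul(state, P)[0]
    return new_state, ec_mul(new_state, Q)[0]


def lift_x(x: int) -> Tuple[int, int]:
    """Recover a curve point with x-coordinate x (either +R or -R)."""
    rhs = (x ** 3 + A * x + B) % FIELD_P
    y = pow(rhs, (FIELD_P + 1) // 4, FIELD_P)
    if y * y % FIELD_P != rhs:
        raise ValueError("not an x-coordinate of a curve point")
    return (x, y)


def backdoor_next_state(output: int) -> int:
    """From r = x(s'Q): d * (+/- s'Q) = +/- s'P, whose x-coordinate is the next state."""
    return ec_mul(D_SECRET, lift_x(output))[0]


# Victim produces two consecutive outputs; the attacker sees only r1.
s1, r1 = dual_ec_step(0xDEADBEEF)
s2, r2 = dual_ec_step(s1)
predicted_r2 = ec_mul(backdoor_next_state(r1), Q)[0]
print(predicted_r2 == r2)
```

The key step is the commutativity of scalar multiplication: d(s'Q) = s'(dQ) = s'P, so whoever knows d can turn a public output into the next internal state.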

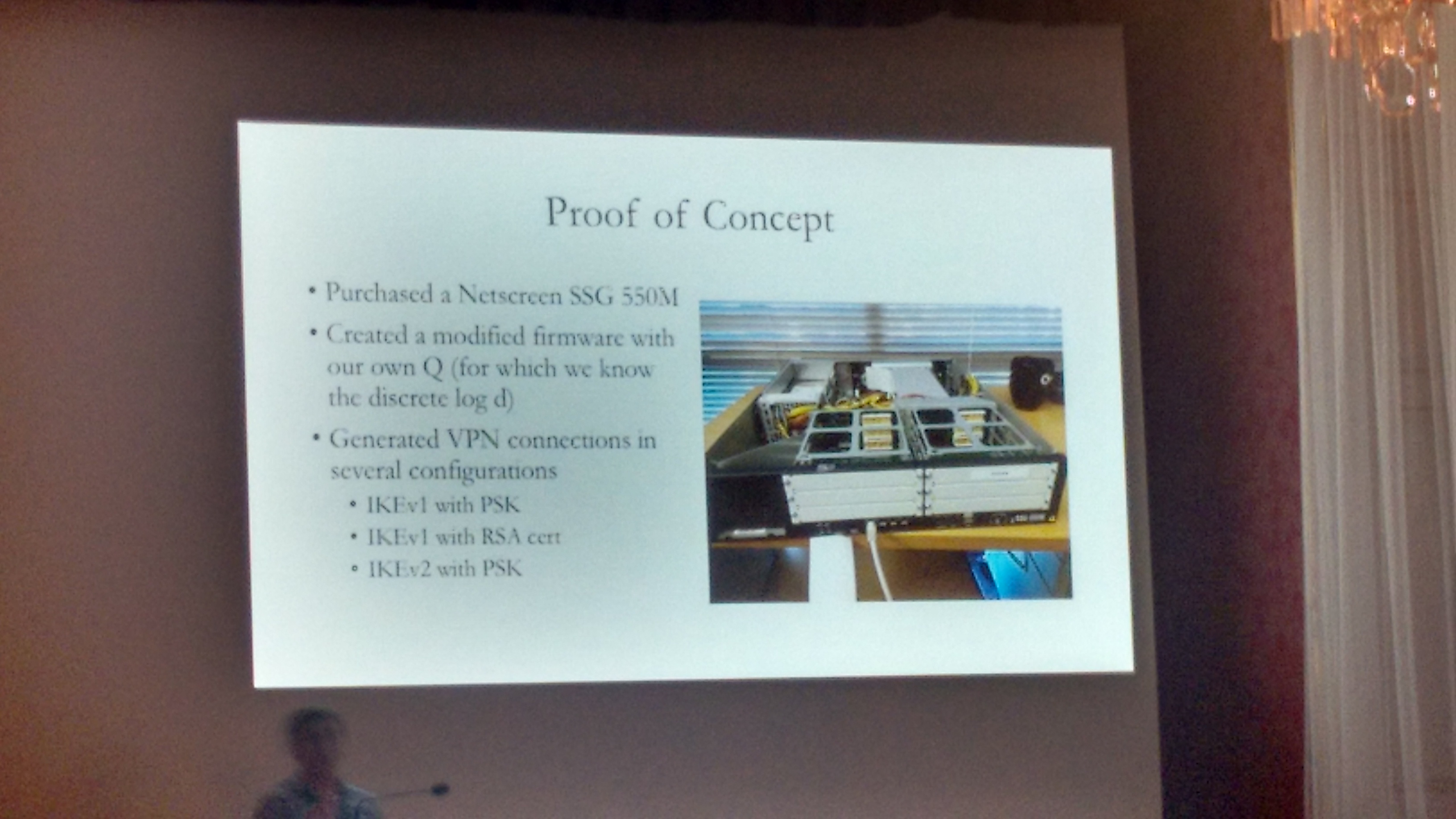

To test their theories, findings and proofs of concept, they acquired a NetScreen SSG 550M, patched the firmware with their own Q (for which they know log_Q(P)), and then generated VPN connections in multiple configurations:

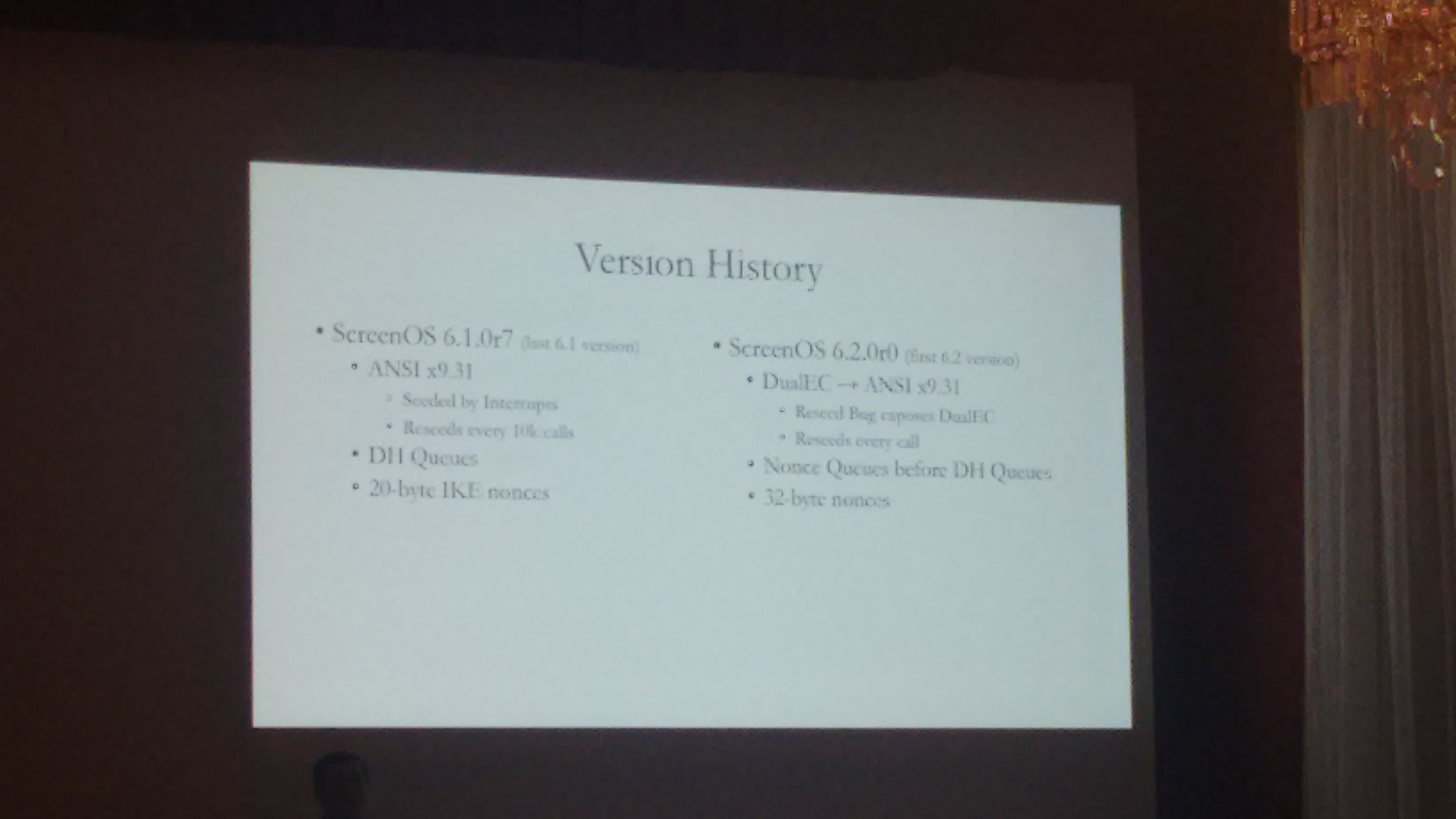

In summary, they identified a handful of changes (Listing 1 in their paper) to both the PRNG and IKE implementations between ScreenOS 6.1 and 6.2 that greatly facilitate state-recovery attacks on the Dual EC generator through IKE/IPsec. Introducing these changes at the very same time as Dual EC created perfect conditions for a very successful single-handshake exploit against the IKE implementation in ScreenOS.

LINKS: https://github.com/hdm/juniper-cve-2015-7755

“Scalable Graph-based Bug Search for Firmware Images”

Heng Yin presented the paper “Scalable Graph-based Bug Search for Firmware Images”.

They start from the observation that finding vulnerabilities in IoT/embedded devices is more crucial now than ever, given the exponential growth of the IoT ecosystem, the unprecedented consequences of security breaches, and the infeasibility of traditional anti-virus policies. (This is quite similar to the observations we made in our www.firmware.re papers “A Large Scale Analysis of the Security of Embedded Firmwares” and “Automated Dynamic Firmware Analysis at Scale: A Case Study on Embedded Web Interfaces”.)

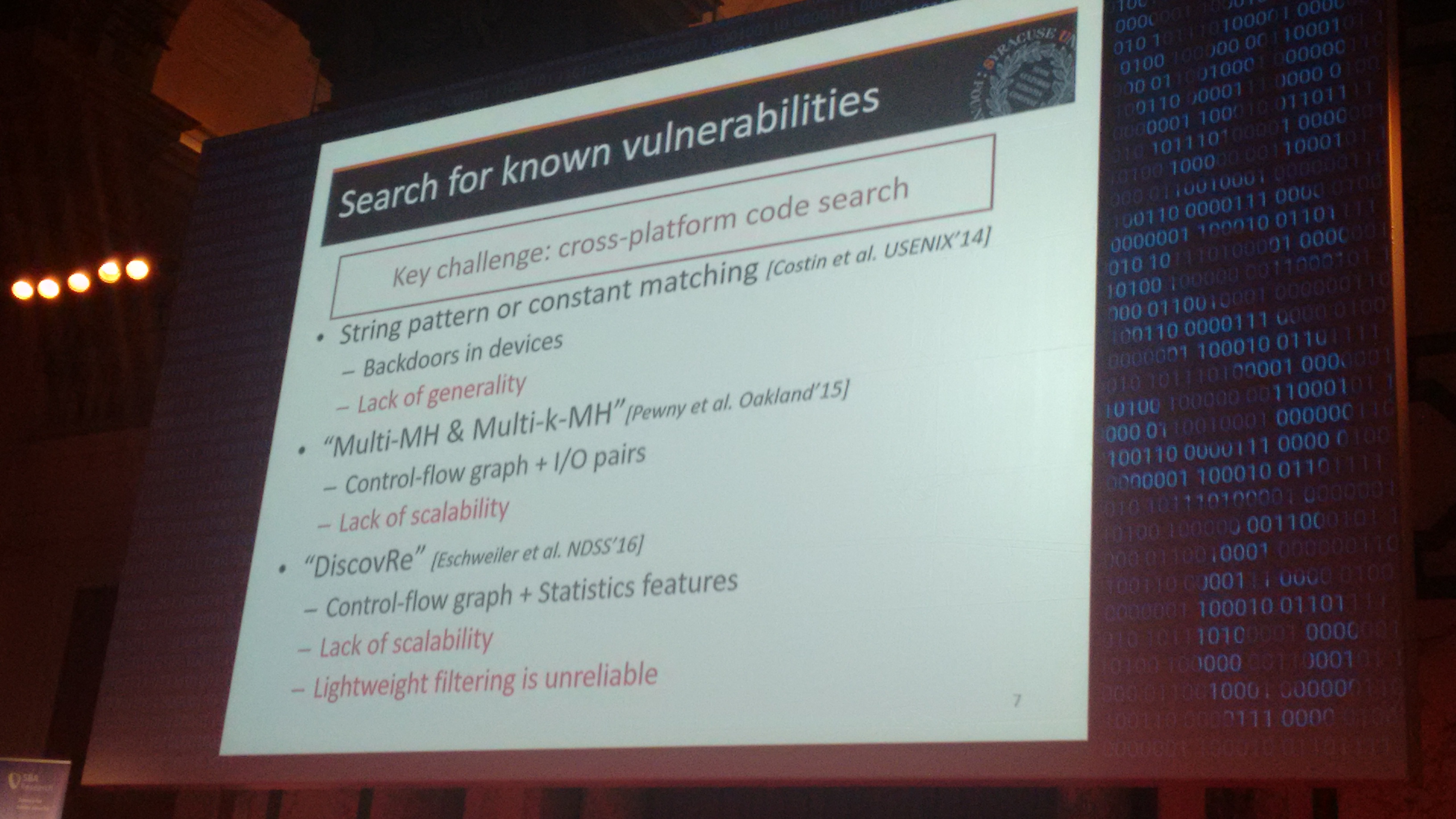

They identify cross-platform code search as a key challenge, one that was only partially addressed in previous works:

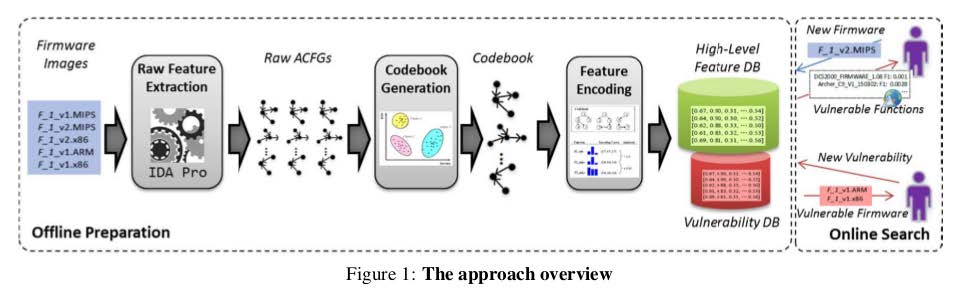

Their approach is a static analysis one: unlike existing approaches that search code based on raw features (CFGs) extracted from the binary, they convert the CFGs into high-level numeric feature vectors. This is claimed to be more robust to code variation across CPU architectures, and it achieves real-time search performance by using locality-sensitive hashing.
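The general idea of turning each function into a fixed-length numeric vector and bucketing similar vectors can be sketched with random-hyperplane locality-sensitive hashing. This is a generic Python sketch, not the authors’ encoding: the per-function features and function names are hypothetical, and a real system would use several hash tables and a proper similarity ranking on top.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence


class HyperplaneLSH:
    """Random-hyperplane LSH: vectors with a small angle tend to share signatures."""

    def __init__(self, dim: int, n_bits: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_bits)]

    def signature(self, vec: Sequence[float]) -> int:
        # One bit per hyperplane: which side of the plane the vector lies on.
        bits = 0
        for plane in self.planes:
            dot = sum(p * v for p, v in zip(plane, vec))
            bits = (bits << 1) | (1 if dot >= 0 else 0)
        return bits


class FunctionIndex:
    """Bucket functions by LSH signature so a query only inspects one bucket."""

    def __init__(self, lsh: HyperplaneLSH) -> None:
        self.lsh = lsh
        self.buckets: Dict[int, List[str]] = defaultdict(list)

    def add(self, name: str, features: Sequence[float]) -> None:
        self.buckets[self.lsh.signature(features)].append(name)

    def query(self, features: Sequence[float]) -> List[str]:
        return list(self.buckets.get(self.lsh.signature(features), []))


# Hypothetical features: [basic blocks, CFG edges, calls, arithmetic instrs, string refs]
index = FunctionIndex(HyperplaneLSH(dim=5, n_bits=8, seed=42))
index.add("fw_a:parse_header", [12, 17, 3, 40, 2])
index.add("fw_b:check_auth", [5, 6, 8, 3, 9])
print(index.query([24, 34, 6, 80, 4]))  # same direction as parse_header, scaled by 2
```

Because the signature only depends on the direction of the vector, a function whose features are scaled up (e.g., the same logic compiled into a larger CFG) lands in the same bucket.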

An overview of their approach is as follows:

Their experiments targeted three main architectures (x86, ARM, MIPS) and were evaluated on 8,126 firmware images (from 26 vendors), which yielded a total of 420 million functions. Their evaluation shows that, on this dataset, a bug search query finishes in less than 1 second.

“Program Anomaly Detection: Methodology and Practices”

Xiaokui Shu and Danfeng Yao presented a 1-hour tutorial session on “Program Anomaly Detection: Methodology and Practices”.

The goal of this tutorial was to give an overview of program anomaly detection, i.e., the process of analyzing normal program behaviors and discovering abnormal executions, which may be caused, for example, by attacks, bugs, misconfigurations, or unusual program usage patterns.

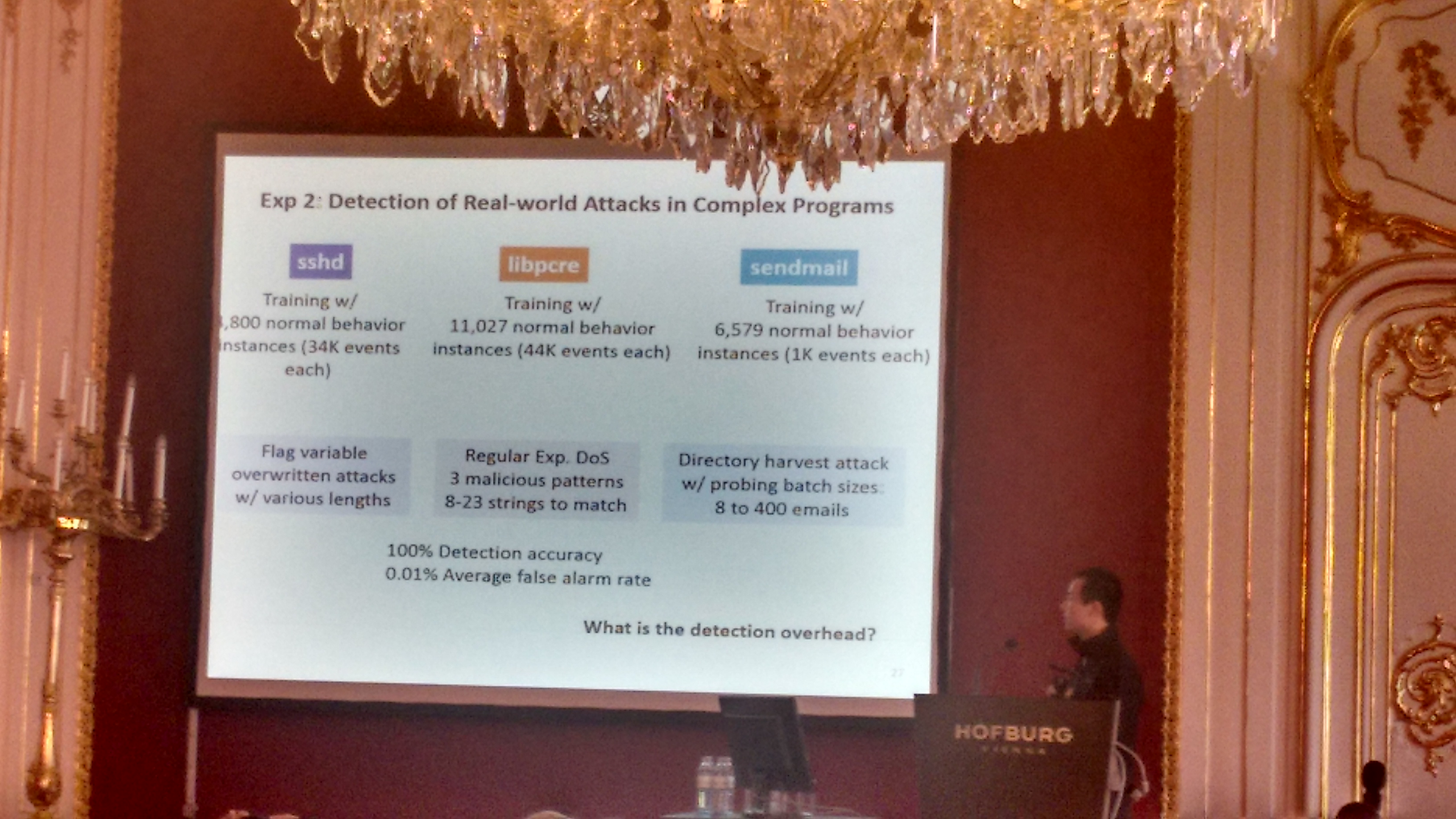

For example, they showed the detection of real-world attacks in complex programs such as sshd, libpcre, and sendmail. Their approach achieves 100% detection accuracy and a quite low average false alarm rate of 0.01%.

Their approach is a probabilistic, (execution?) path-sensitive, locally analyzed program anomaly detection based on Hidden Markov Models (HMMs).
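For readers unfamiliar with HMM-based detection, the core scoring step is the forward algorithm: compute how likely an observed sequence of events (e.g., system calls) is under a model trained on normal runs, and flag sequences that are too unlikely. Below is a textbook Python sketch with scaling, not the presenters’ models; the two-state model, its probabilities, the syscall alphabet and the threshold are all hypothetical (in practice they would be learned from normal traces, e.g., with Baum-Welch).

```python
import math
from typing import List, Sequence


def log_likelihood(obs: Sequence[int],
                   start: List[float],
                   trans: List[List[float]],
                   emit: List[List[float]]) -> float:
    """Scaled forward algorithm: returns log P(obs | HMM)."""
    n = len(start)
    alpha = [start[s] * emit[s][obs[0]] for s in range(n)]
    total = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][obs[t]]
                     for j in range(n)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence impossible under the model
        alpha = [a / scale for a in alpha]  # rescale to avoid numeric underflow
        total += math.log(scale)
    return total


# Hypothetical syscall alphabet and a hand-set 2-state model.
OPEN, READ, CLOSE, EXECVE = range(4)
START = [0.8, 0.2]
TRANS = [[0.6, 0.4],
         [0.3, 0.7]]
EMIT = [[0.40, 0.40, 0.19, 0.01],   # state 0
        [0.10, 0.70, 0.19, 0.01]]   # state 1


def is_anomalous(obs: Sequence[int], threshold_per_event: float = -2.5) -> bool:
    """Flag a trace whose average log-likelihood per event is below a (hypothetical) threshold."""
    return log_likelihood(obs, START, TRANS, EMIT) / len(obs) < threshold_per_event


print(is_anomalous([OPEN, READ, READ, CLOSE]))       # normal-looking trace
print(is_anomalous([OPEN, EXECVE, EXECVE, EXECVE]))  # unusual trace
```

Normalizing by the trace length keeps the threshold comparable across traces of different sizes; a real detector would tune it on held-out normal traces to hit a target false alarm rate.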

The actual hands-on part did not take place, because of time constraints and because the shared lab host had already been altered by someone beyond the point of being usable, even by the presenters :-/

If you want to exercise program anomaly detection on your own, there are plenty of exercises at the links below.

LINKS: https://github.com/subbyte/padlabs

ssh ccs2016@parma.cs.vt.edu -p 2222

password: HofburgV6