During a recent research project we performed an in-depth security assessment of Microsoft’s virtualization technologies, including Hyper-V and Azure. While we already had experience in discovering security vulnerabilities in other virtual environments (e.g. here and here), this was our first research project on the Microsoft virtualization stack and we took care to use a structured evaluation strategy to cover all potential attack vectors.

Part of our research concentrated on the Hyper-V hypervisor itself and we discovered a critical vulnerability which can be exploited by an unprivileged virtual machine to crash the hypervisor and potentially compromise other virtual machines on the same physical host. This bug was recently patched, see MS13-092 and our corresponding post.

A First Glance – RA Guard Support in Hyper-V 3.0

Last week I read about the new networking features of the integrated vSwitch of Hyper-V 3.0. I was quite surprised that RA Guard will be natively supported and was curious about implementation and functionality. If you don’t know how RA Guard works, I recommend reading our previous blog posts here, here, here, here and here, or have a look at our workshop at Troopers12.

I downloaded Windows Server 2012 RC to do some practical testing. Since my girlfriend was working the whole weekend, I had plenty of time to play around with all that stuff without risking trouble 😉

I installed the RC and Hyper-V role on one of our lab systems.

The basic setup looks as follows:

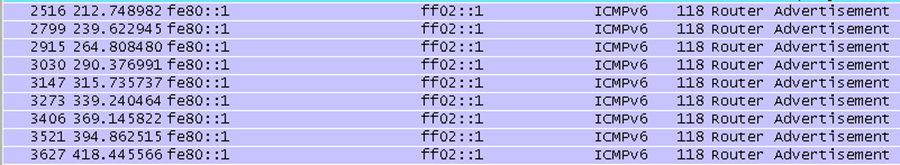

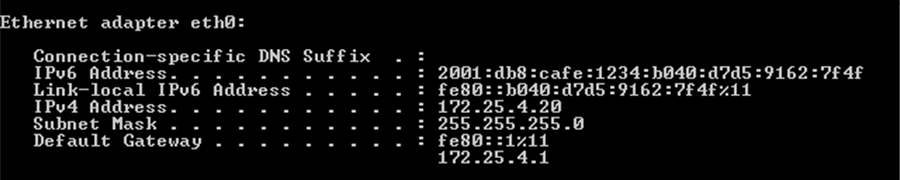

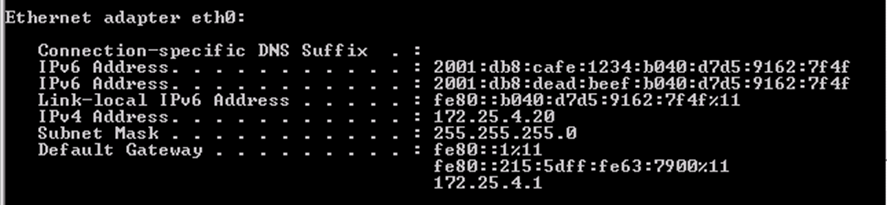

Two VMs were installed (one Windows 7 box and one BackTrack 5 box). Both were connected to the same vSwitch and were part of the same layer-2 domain (as RAs only matter within a layer-2 domain). A layer-3 switch acted as the legitimate router, sending RAs so that the Windows 7 box configured itself appropriately. All tests were done with the THC-IPv6 suite from Marc Heuse. The IPv6 configuration of the Windows 7 box looks as follows:

Before starting my tests, I wanted to verify that all is working correctly. So I began sending rogue RAs from a Backtrack VM.

Nothing special here, the Windows 7 box received the RA and configured an IPv6 address from the supplied prefix.

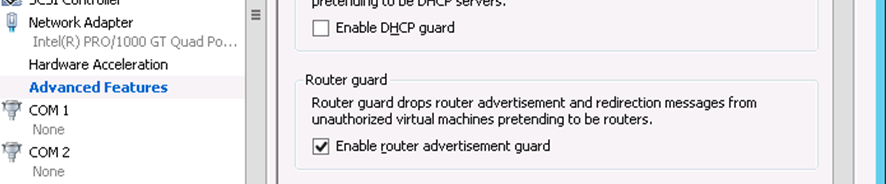

After the verification succeeded I enabled RA Guard in the settings of the network adapter on the Backtrack VM.

To structure the tests a little bit, I created a small test plan on what and how I wanted to test:

Test 1 – Sending Rogue RAs with RA Guard Enabled

The first test consisted of sending (non-fragmented) RAs with RA Guard enabled on the VM.

Test 1 – Results

I started sending rogue RAs again, just as I had done during the verification run.

As I expected, the RAs were filtered by the vSwitch and were not seen on the Windows 7 box (only the legitimate RAs from fe80::1 arrived).

Consequently, the IPv6 configuration did not change.

Test 1.1 – Sending Fragmented Rogue RAs with RA Guard Enabled

In the next test I wanted to figure out how the vSwitch handles fragmented RAs where the ICMPv6 header is only present in the second fragment.

Test 1.1 – Results

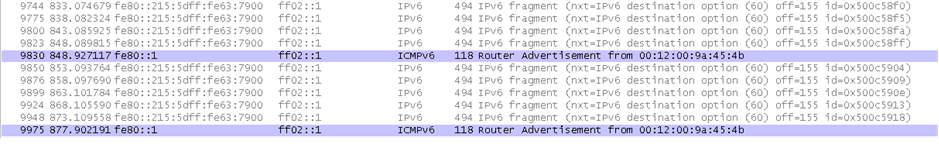

I started to send fragmented RAs with an additional Destination Options header to force fragmentation.

Interestingly, the first fragment with the destination header passed the vSwitch and arrived at the Windows 7 box. However, the second fragment with the ICMPv6 header got filtered by the vSwitch. You can see the legitimate RAs in between the fragments.

As in the previous test, the IPv6 configuration of the client did not change, and the received fragment did not have any impact on the CPU load of the box.
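To make the fragment layout more concrete, here is a minimal Python sketch of what each of the two fragments exposes to a device that inspects packets one at a time. It is not how the Hyper-V vSwitch works internally (I don't know that); the helper names, the 8-byte Destination Options header and the zeroed checksum are all assumptions chosen for illustration, and the fixed IPv6 header is left out, so every packet here starts at its Fragment header.

```python
import struct

IPV6_DEST_OPTS = 60        # Next Header value: Destination Options
ICMPV6 = 58                # Next Header value: ICMPv6
ROUTER_ADVERTISEMENT = 134  # ICMPv6 type of a router advertisement


def fragment_header(next_header, offset_bytes, more, ident):
    """8-byte IPv6 Fragment header: offset in 8-byte units, lowest bit = M flag."""
    off_flags = ((offset_bytes // 8) << 3) | (1 if more else 0)
    return struct.pack("!BBHI", next_header, 0, off_flags, ident)


def dest_opts_header(next_header):
    """Minimal 8-byte Destination Options header filled with one PadN option."""
    return struct.pack("!BB", next_header, 0) + bytes([1, 4, 0, 0, 0, 0])


def router_advert():
    """Bare 16-byte RA without options; checksum left at 0 (illustration only)."""
    return struct.pack("!BBHBBHII", ROUTER_ADVERTISEMENT, 0, 0, 64, 0, 1800, 0, 0)


def split_fragment(packet):
    """Return (next_header, offset_bytes, more, data) of a fragment."""
    nh, _, off_flags, _ = struct.unpack_from("!BBHI", packet, 0)
    return nh, (off_flags >> 3) * 8, bool(off_flags & 1), packet[8:]


def icmpv6_type(next_header, data):
    """Walk the header chain in data; return the ICMPv6 type if it is visible."""
    pos = 0
    while pos < len(data):
        if next_header == ICMPV6:
            return data[pos]
        if next_header != IPV6_DEST_OPTS or pos + 2 > len(data):
            return None
        next_header, ext_len = data[pos], data[pos + 1]
        pos += (ext_len + 1) * 8
    return None  # the chain continues in a later fragment


def type_seen_in_fragment(packet):
    """What a stateless, per-packet inspection can learn from one fragment."""
    nh, offset, _more, data = split_fragment(packet)
    if offset != 0:
        return None  # data starts mid-packet, the chain cannot be parsed here
    return icmpv6_type(nh, data)


def type_after_reassembly(fragments):
    """Reassemble by offset, then walk the complete header chain."""
    parts = sorted((split_fragment(f) for f in fragments), key=lambda p: p[1])
    return icmpv6_type(parts[0][0], b"".join(p[3] for p in parts))


dest = dest_opts_header(ICMPV6)
frag1 = fragment_header(IPV6_DEST_OPTS, 0, True, 0x1234) + dest
frag2 = fragment_header(IPV6_DEST_OPTS, len(dest), False, 0x1234) + router_advert()

print(type_seen_in_fragment(frag1))           # None
print(type_seen_in_fragment(frag2))           # None
print(type_after_reassembly([frag2, frag1]))  # 134
```

Looked at in isolation, neither fragment carries a parseable ICMPv6 type 134; it only shows up once the fragments are put back together. So a filter has to keep state, reassemble, or apply some heuristic to non-first fragments. Which of these the vSwitch does, I can't tell from the outside.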

Interim Conclusion

The RA Guard implementation of the vSwitch in Hyper-V reliably filters router advertisements from a VM, whether or not they are fragmented. This is a good thing. Unfortunately, I couldn’t find any entries in the event viewer (or elsewhere) indicating that a VM had started to send RAs. This just hides the problem without really addressing it. As an administrator of a virtualized environment I would want to know when a VM starts to send RAs, in order to take appropriate measures.

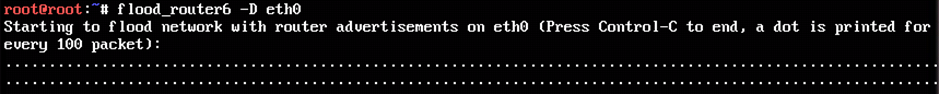

Test 2 – Flooding RAs with RA Guard Enabled

In the next test, I wanted to find out how the vSwitch (or rather the physical Hypervisor) behaves when a VM generates a lot of RAs. For this test, the flood_router6 module was used.

I started to flood RAs from the Backtrack VM.

The RAs were filtered by the vSwitch and didn’t reach the Windows 7 Box. I got the same result in Wireshark as in the test before. Only the legitimate RAs were seen by the VMs.

The more interesting thing I noticed was the CPU load of the physical Hypervisor jumping to around 25% (occupying a whole CPU) as soon as I started flooding.

btw: That’s what the new task manager looks like in Windows Server 2012

I started wondering: if one VM flooding RAs can produce 25% CPU load, what happens if more than one VM starts to flood RAs? Let’s find out…

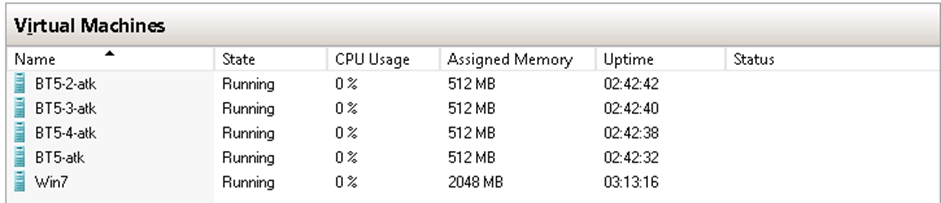

… with three additional instances of the Backtrack VM for a total of four.

I had some trouble getting all four working simultaneously because the flood_router6 module exited quite a few times (which is fixed in version 1.9). That’s why you see those variations in the CPU load. If you look at the point where the CPU load was nearly zero, you can see how it increases in 25% steps as each additional VM starts to flood RAs. However, even with four VMs flooding in parallel the CPU load stalled at around 75%. But this may only be a matter of how many VMs are flooding RAs.

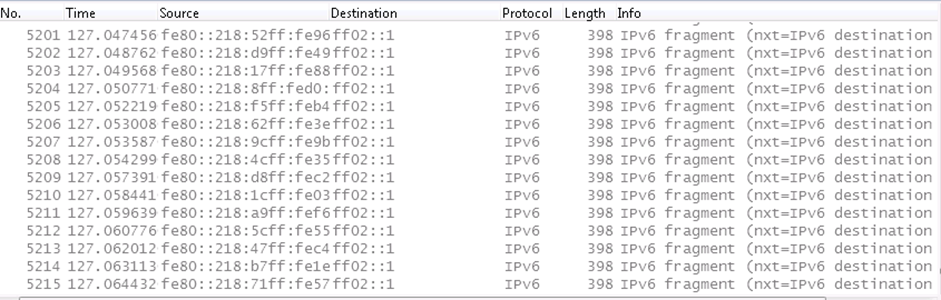

Test 2.1 – Flooding Fragmented RAs with RA Guard Enabled

As we now knew about the impact on the Hypervisor when one or more VMs start to flood RAs, I wanted to know if anything changes for fragmented RAs.

I started to flood fragmented RAs with an additional Destination Options header to see what happens.

The result was the same as for the fragmented rogue RAs in Test 1.1. The first fragment hit the Windows 7 box, but the second fragment got filtered by the vSwitch.

The received fragments did not have any impact on the CPU load of the Windows 7 box.

As before, I was mostly interested in the Hypervisor’s behavior.

Interestingly, the CPU load doubles as soon as fragmented RAs are sent by one VM.

The obvious next step was to increase the VM count to four again.

The CPU load increases to 90% on average, which is quite a lot for just four VMs sending a lot of (fragmented) RAs. Other VMs are not directly affected by the RAs. However, a Hypervisor running out of resources is never a good thing.
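As a rough sanity check of these numbers, here is a back-of-the-envelope model in Python. It assumes the lab host has four logical CPUs (my reading of "25% equals one whole CPU") and that every flooding VM keeps a fixed number of CPUs busy; the function name and parameters are made up for this sketch.

```python
def expected_host_load(flooding_vms, cpus_per_flooder, logical_cpus=4):
    """Predicted host CPU load in percent under a simple linear model."""
    busy = min(flooding_vms * cpus_per_flooder, logical_cpus)
    return 100.0 * busy / logical_cpus


# Unfragmented RAs: one VM cost about one CPU (25% on the assumed 4-CPU host).
for vms in range(1, 5):
    print("plain", vms, expected_host_load(vms, cpus_per_flooder=1.0))

# Fragmented RAs: the load per VM roughly doubled.
for vms in range(1, 5):
    print("fragmented", vms, expected_host_load(vms, cpus_per_flooder=2.0))
```

The linear model predicts a fully loaded host with four flooding VMs in both cases, while I measured roughly 75% for unfragmented and 90% on average for fragmented RAs. So the load does not scale perfectly linearly, but it gets uncomfortably close to the limit.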

Final Conclusion – Well said, Mister, but does this really matter?

I think it does, because Windows Server 2012 is positioned as “cloud operating system”. As a cloud provider, you typically want to give your customers the ability to spin up as many virtual machines as they like. When a malicious customer can exhaust the Hypervisor’s resources, normal customers will be affected as well, in particular if exhausting the resources can be done this easily.

Be aware that these tests were done with the Release Candidate, and it might be possible that the final version of Windows Server 2012 behaves differently. We have to wait and see ;). I will definitely run the tests again when Windows Server 2012 ships.

So what can we do to address this issue? Unfortunately I don’t have a definite answer as of right now. Without knowing the codebase it’s hard to say how this behavior could be prevented or improved. So far it’s up to the vendor to address this issue, and it’s the customers’ (or pentesters’) duty to point at it…

One thing Microsoft could do is simply disconnect the virtual NIC if too many RAs are sent from a VM, in order to protect the resources of the Hypervisor.
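Just to illustrate the idea, such a safeguard could be as simple as a sliding-window counter per virtual switch port. The following Python sketch is purely hypothetical: the class name, the threshold and the port identifiers are my own inventions and have nothing to do with any real Hyper-V interface.

```python
from collections import deque


class RaFloodMonitor:
    """Counts filtered RAs per port and flags ports that exceed a rate limit."""

    def __init__(self, max_ras, window_seconds):
        self.max_ras = max_ras
        self.window = window_seconds
        self._seen = {}            # port id -> timestamps of recent filtered RAs
        self.disconnected = set()  # ports that exceeded the limit

    def record_filtered_ra(self, port, now):
        """Register one filtered RA; return True if the port should be cut off."""
        if port in self.disconnected:
            return True
        stamps = self._seen.setdefault(port, deque())
        stamps.append(now)
        # Forget RAs that are older than the observation window.
        while stamps and now - stamps[0] > self.window:
            stamps.popleft()
        if len(stamps) > self.max_ras:
            self.disconnected.add(port)
            return True
        return False


monitor = RaFloodMonitor(max_ras=100, window_seconds=1.0)

# A misconfigured VM that sends one RA per second stays connected.
slow = [monitor.record_filtered_ra("vm-a", float(t)) for t in range(10)]

# A flooding VM that sends 1000 RAs within 0.1 seconds gets cut off.
fast = [monitor.record_filtered_ra("vm-b", i * 0.0001) for i in range(1000)]

print(any(slow), any(fast), sorted(monitor.disconnected))
```

The same counter could also be used to write a log entry as soon as the first RA from a VM gets filtered, which would take care of the missing visibility I complained about in the interim conclusion.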

Have a great week,

Chris