From October 17th to 19th, 2017, I had the chance to attend DockerCon Europe for the first time.

The conference was very well organized and attendee-focused, which showed in many little details. For example, you never ran out of coffee or other beverages, there was a new Hallway Track where you could meet people from all disciplines and discuss your favorite topics, and there was always a place to sit and take a break between all those interesting presentations. I had the chance to speak to very nice people from different industries, most importantly, in my case, about security. It was nice to see how the Docker community is growing and how adoption is increasing, especially in companies. The main focus of the conference (especially in the talks held by people from Docker Inc.) was Docker Enterprise Edition.

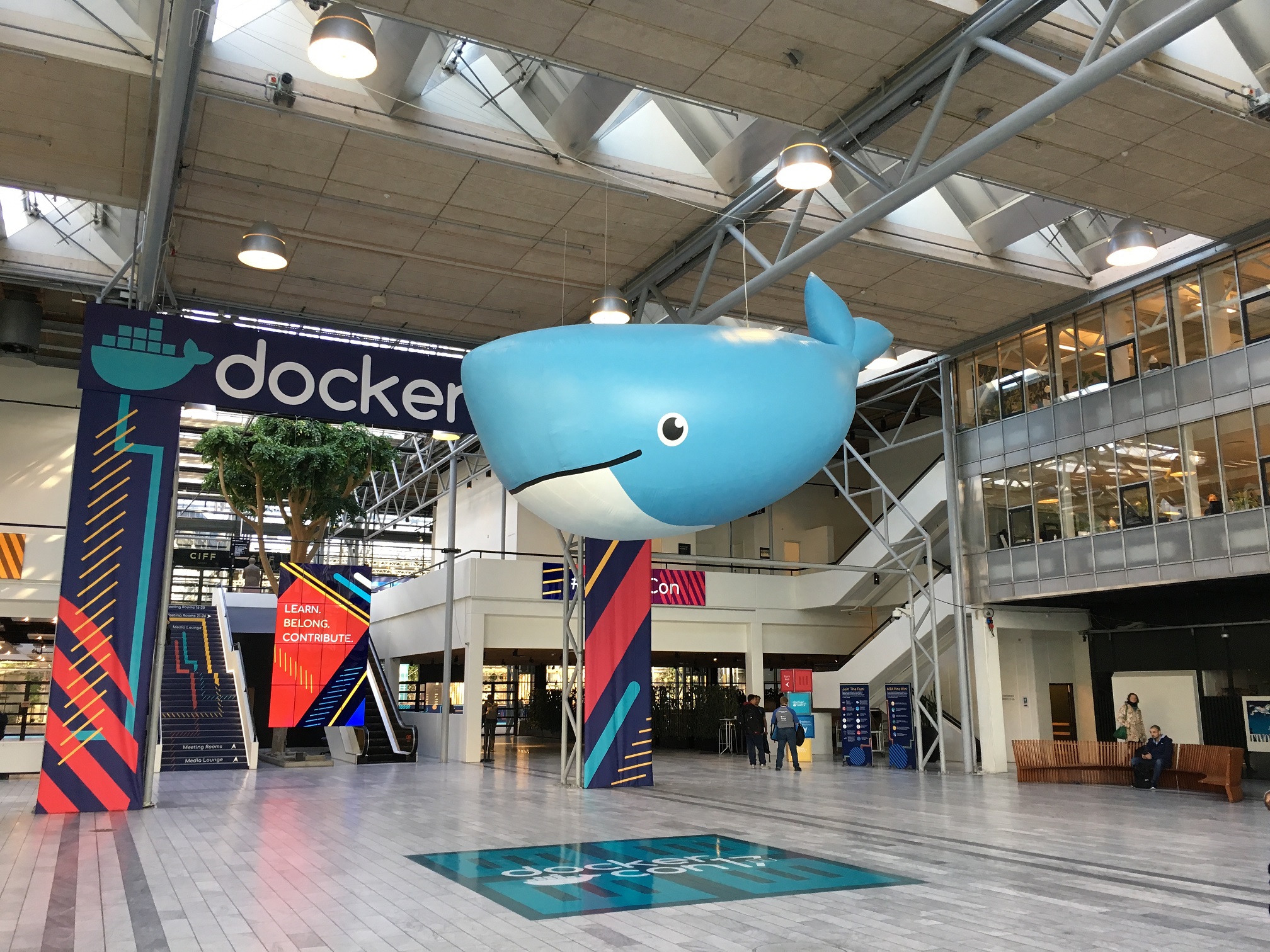

On Monday evening I arrived at the Cabinn Hotel, my residence for the next few days. It was only about a ten-minute walk from the Bella Center, where the conference took place. When I arrived at the Bella Center on Tuesday, the first thing I noticed was the big, wide halls. They made the conference comfortable even with over 2500 other attendees, and you never felt cramped because of too many people in one room (which is often the case at bigger conferences).

At 9:00 the keynote was held by Docker CEO Steve Singh. He talked about how Docker provides one technology to modernize traditional applications and deploy them onto different infrastructure using Swarm in Docker EE. Later in the keynote, the founder and CTO of Docker, Solomon Hykes, announced the integration of vanilla Kubernetes into the Docker tools (Docker CE) to allow development and local testing for Kubernetes and Swarm with the same toolset. Docker EE is also going to get full support for running Kubernetes and Swarm in parallel, and Kubernetes is going to be extended to support Docker Stacks.

The first presentation I visited was about the current status of play-with-docker.com (PWD), a tool for playing around with the Docker CLI. Marcos Lilljedahl and Jonathan Leibiusky talked about how they implemented and used Docker-in-Docker, networking tweaks, and custom networking plugins to allow access to Linux and Windows instances and to make them communicate with each other. One of the pitfalls while implementing PWD was that they had to run the containers as privileged (we all know that’s bad), which made them attackable by simply mounting the host filesystem into the container.

The next talk was titled “Hacked! Run Time Security”. It was given by Gianluca Borello from Sysdig, who presented some components of the Sysdig toolchain for monitoring and troubleshooting containers. It uses a kernel module to track each system call made in the kernel, buffers these events, and lets you set up alerts that report to the central “Security Console” when an anomaly occurs. He also explained a multi-step plan for securing the runtime, consisting of observation, service information and scale. Observation means tracking everything that occurs without affecting the running containers. Service information means gathering all necessary information about the running services. By scale he meant that the previous steps should also scale to a complete cluster of many nodes.
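
To make the idea of alerting on system call events more concrete, here is a minimal sketch in plain Python. It is not Sysdig's rule format or API: the event shape (`SyscallEvent`), the `AlertRule` class and both example rules are assumptions made up for illustration. It only shows the general pattern of passively matching observed events against rules.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class SyscallEvent:
    """One observed system call (hypothetical, heavily simplified)."""
    container: str
    process: str
    syscall: str
    path: str = ""


@dataclass(frozen=True)
class AlertRule:
    """A named predicate; an event that matches it is treated as an anomaly."""
    name: str
    matches: Callable[[SyscallEvent], bool]


def find_alerts(events: Iterable[SyscallEvent], rules: List[AlertRule]) -> List[str]:
    """Return one alert string per (event, rule) match, in event order.

    The events are only read, never modified, which mirrors the
    "observe without affecting the containers" idea.
    """
    alerts = []
    for event in events:
        for rule in rules:
            if rule.matches(event):
                alerts.append(f"{rule.name}: {event.process} in {event.container}")
    return alerts


# Illustrative rules, not taken from the talk.
RULES = [
    AlertRule("shell spawned in container",
              lambda e: e.syscall == "execve" and e.path in ("/bin/sh", "/bin/bash")),
    AlertRule("file opened below /etc",
              lambda e: e.syscall == "openat" and e.path.startswith("/etc/")),
]

EVENTS = [
    SyscallEvent("web", "nginx", "read"),
    SyscallEvent("web", "nginx", "execve", "/bin/sh"),
    SyscallEvent("db", "postgres", "openat", "/etc/passwd"),
]

if __name__ == "__main__":
    for alert in find_alerts(EVENTS, RULES):
        print(alert)
```

A real system would read events from a kernel-level source and forward alerts to a central console; both parts are left out here.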

The next presentation I attended was “Docker Enterprise Checklist” in the “Best Practices” track. In this presentation, Nicola Kabar, lead solutions architect at Docker, talked about the problems encountered and lessons learned when setting up a production environment for running enterprise applications on Docker EE. He covered infrastructure, orchestration, replication, data storage, security scanning, networks and monitoring, and explained each topic using use cases from his work with customers. At the end he mentioned the site https://success.docker.com/, which is a good source of information when it comes to using Docker CE or EE.

The talk “Docker 500: Going fast while protecting data” (Docker 500 is a reference to the Indy 500) by Diogo Mónica, the security lead at Docker Inc., offered an alternative view on security by looking at Formula 1 (F1) and comparing its safety measures to security in IT infrastructures that use Docker. He used this reference because most of the time, when a terrible crash happens in F1, the driver just walks away from the car without severe injuries. The comparison can be divided into two parts: before and after the crash. Translated into the IT world, he drew the following conclusions:

Before the crash:

- Use a CI pipeline to test and create repeatable builds of your application

- Use segmentation: split your application into segments and isolate them

- Practice worst case scenarios: how fast can I restore backups? How to handle a key compromise?

- Reverse uptime: replace instances with long uptimes to ensure the latest patch level

During / after the crash:

- Be able to freeze your container fast and analyze later

- Scale automatically

- Use sandboxing by default to add a layer of defense

- Use RBAC
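
The “reverse uptime” item from the list above can be sketched in a few lines of Python. This is only an illustration under assumptions: the function name `instances_to_replace`, the instance names and the seven-day limit are made up, and a real setup would get start times from its orchestrator and trigger the replacement there.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def instances_to_replace(started_at: Dict[str, datetime],
                         now: datetime,
                         max_uptime: timedelta) -> List[str]:
    """Return the names of instances running longer than max_uptime, oldest first."""
    too_old = [(start, name) for name, start in started_at.items()
               if now - start > max_uptime]
    return [name for _start, name in sorted(too_old)]


# Hypothetical fleet; names and dates are illustrative only.
NOW = datetime(2017, 10, 19, 12, 0, tzinfo=timezone.utc)
FLEET = {
    "web-1": datetime(2017, 10, 1, 8, 0, tzinfo=timezone.utc),
    "web-2": datetime(2017, 10, 18, 8, 0, tzinfo=timezone.utc),
    "db-1": datetime(2017, 9, 15, 8, 0, tzinfo=timezone.utc),
}

if __name__ == "__main__":
    # With a seven-day limit, db-1 and web-1 are due for replacement.
    print(instances_to_replace(FLEET, NOW, timedelta(days=7)))
```

Treating long uptime as a liability instead of an achievement means every instance is regularly rebuilt from a current, patched image.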

The second-day keynote was held by the COO of Docker Inc., Scott Johnston, and was mainly about how Docker and its partners support companies in containerizing old applications through the “Docker Modernize Traditional Apps (MTA) POC program”. He announced a new partner for the MTA program: IBM. He also invited Finnish Rail on stage to talk about how they migrated their infrastructure to Docker EE.

The next presentation was more technical, with the title “Cilium – Kernel Native Security & DDOS”, held by Cynthia Thomas. She talked about how Cilium uses BPF (Berkeley Packet Filter) and XDP (eXpress Data Path) to allow fine-grained network access control and DDoS mitigation while maintaining stability and performance. Big applications often offer large APIs that are used by other microservices (e.g. Apache Kafka). With the common iptables approach, rules allow access from one microservice to another by specifying an IP and a port. If no further access control is active, this means either full access or no access at the API level. Cilium lets you define policies at the network level that restrict access to certain paths of the API only (L7-aware). It is based on the proven BPF technology: a Cilium agent compiles the rules and injects the bytecode directly into the kernel. XDP is used to handle incoming packets as early as possible, at the NIC level (even before the TCP stack). This also offers better DDoS mitigation, since packets can be dropped right away without wasting a lot of CPU. Cilium looks like quite an interesting technology for implementing security at the network level, but since it is still young, it has yet to prove itself in production.
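
The difference between an IP-and-port rule and an L7-aware rule can be shown with a small model in plain Python. This is not Cilium's policy format or API, and nothing here touches BPF. The `Request` and `AccessRule` classes, the service name `frontend` and the paths are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Request:
    source: str   # name of the calling microservice
    port: int
    method: str
    path: str


@dataclass(frozen=True)
class AccessRule:
    source: str
    port: int
    # L7 fields. None means "not restricted", which is all that a
    # plain source-and-port rule can express.
    method: Optional[str] = None
    path_prefix: Optional[str] = None

    def allows(self, req: Request) -> bool:
        if req.source != self.source or req.port != self.port:
            return False
        if self.method is not None and req.method != self.method:
            return False
        if self.path_prefix is not None and not req.path.startswith(self.path_prefix):
            return False
        return True


def is_allowed(req: Request, rules: List[AccessRule]) -> bool:
    """Default deny: a request passes only if at least one rule allows it."""
    return any(rule.allows(req) for rule in rules)


# Port-level rule: the frontend may reach port 80, whatever it asks for.
L4_RULES = [AccessRule("frontend", 80)]
# L7-aware rule: the frontend may only GET paths below /public/.
L7_RULES = [AccessRule("frontend", 80, method="GET", path_prefix="/public/")]

READ = Request("frontend", 80, "GET", "/public/items")
ADMIN = Request("frontend", 80, "DELETE", "/admin/users")

if __name__ == "__main__":
    print(is_allowed(ADMIN, L4_RULES))  # port-level rule cannot tell the two requests apart
    print(is_allowed(ADMIN, L7_RULES))  # L7-aware rule rejects the admin call
    print(is_allowed(READ, L7_RULES))   # and still lets the read through
```

With the port-level rule, both requests pass because they share the same source and port. Only the L7-aware rule can separate the harmless read from the admin call.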

My last talk of the conference was “Building a Secure Software Supply Chain with Docker”, held by Andy Clemenko and Ashwini Oruganti from Docker Inc. It is based on Docker EE. They explained a classic software supply chain from a security perspective (especially signing, scanning and promotion). Signing ensures that an image is not modified on its way into production and that it has taken the correct path through the CI pipeline. It also enables accountability. The next step is scanning the images for vulnerabilities. If a vulnerability is detected, it is also possible to rebuild all child images. Based on the scan results, images can be promoted from one environment to the next, for example from development to testing to production. Promotion rules can be defined to promote images automatically, for example by defining a blacklist of packages (e.g. tcpdump, nmap, …) that must not be contained in the image, or a threshold of vulnerabilities.
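
The promotion rules described above (a package blacklist plus a vulnerability threshold) can be modeled roughly like this in Python. It is a sketch under assumptions, not the Docker EE API: `ScanResult`, `PromotionPolicy`, the image names and the choice to count only critical vulnerabilities are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ScanResult:
    """Outcome of a vulnerability scan for one image (hypothetical shape)."""
    image: str
    packages: FrozenSet[str]
    critical_vulnerabilities: int


@dataclass(frozen=True)
class PromotionPolicy:
    blocked_packages: FrozenSet[str]
    max_critical_vulnerabilities: int

    def violations(self, scan: ScanResult) -> List[str]:
        """List every reason why the image must not be promoted."""
        reasons = []
        for package in sorted(self.blocked_packages & scan.packages):
            reasons.append(f"blocked package present: {package}")
        if scan.critical_vulnerabilities > self.max_critical_vulnerabilities:
            reasons.append(
                f"{scan.critical_vulnerabilities} critical vulnerabilities "
                f"exceed the limit of {self.max_critical_vulnerabilities}"
            )
        return reasons

    def may_promote(self, scan: ScanResult) -> bool:
        return not self.violations(scan)


# Illustrative policy for promotion into production.
POLICY = PromotionPolicy(frozenset({"tcpdump", "nmap"}), max_critical_vulnerabilities=0)

CLEAN = ScanResult("app:1.0", frozenset({"openssl", "curl"}), 0)
DIRTY = ScanResult("app:1.1", frozenset({"curl", "tcpdump"}), 2)

if __name__ == "__main__":
    for scan in (CLEAN, DIRTY):
        if POLICY.may_promote(scan):
            print(f"{scan.image}: promote")
        else:
            print(f"{scan.image}: hold back, {'; '.join(POLICY.violations(scan))}")
```

Returning the list of violations instead of a bare yes or no keeps the decision explainable, which fits the accountability aspect mentioned for signing.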

Cheers,

Simon