This article is about the massive wave of BSODs triggered worldwide by a CrowdStrike update on July 19, 2024. CrowdStrike and other sources regularly publish analyses and information that complement what is expressed here. Updates may also be provided in the future.

Friday, July 19, 2024, will be remembered in computing history as the day of one of the biggest waves of BSODs (Blue Screens of Death). We saw air traffic come to a standstill over the USA and people climbing ladders with USB sticks to update giant screens. The impact was still measurable many days later. The question on everyone’s lips is how all of this happened. CrowdStrike has regularly published explanations of what happened. But these explanations can be hard to follow, because they rely on CrowdStrike’s internal vocabulary and on technical details of the driver implementation. The analysis also misses some points we consider relevant for secure software development. This article discusses conclusions from this massive crash, especially the need to change our mindset about software. We should independently understand, document, and evaluate the software provided by vendors, so that we know exactly what we install on our systems and can assess the risk we take by using it. The time of naive belief in software magic must end and give way to independent third-party reviews that analyse the reliability, security, and stability of software. This is an activity we have been doing at ERNW for years, for example for the German Federal Office for Information Security (BSI) [1].

This article starts by explaining the reasons for the crash as given by CrowdStrike and confirmed by independent analysis. It then discusses the crash itself and the mitigations proposed by CrowdStrike to prevent such an issue from happening again, including a review of their proposals and of what could be done in addition. Finally, it explains the main lessons that should be learned from the incident.

Explanations from CrowdStrike

Since the beginning of the incident, CrowdStrike has regularly published updates on the situation [2]. In particular, the company released a detailed report explaining the root causes of the crash and the mitigations applied [3]. This effort at transparency is welcome.

CrowdStrike wording

The content of the document is complex, combining the technical operation of CrowdStrike’s EDR with the vocabulary used internally. In the following, we propose (what we hope to be) a comprehensible and condensed version of it.

Internally, their EDR is built around a Content Interpreter, a regular piece of software written in C++ whose primary purpose is to use Template Instances to perform telemetry and detection operations. Template Instances consist of regex content (which can be approximated as generic “detection rules”) intended for use with a specific Template Type. A Template Type contains predefined fields for threat detection, and all of these fields are first defined in a Template Type Definitions file. There is also a Content Validator, used to evaluate new Template Instances.

In other words, a Template Type defines a specific set of capabilities for detecting a specific kind of threat. It is implemented through a Template Type Definitions file, which defines the parameters usable in a regex for a given kind of detection (depending on the type: network detection, file activity detection, and so on). From this template, detection engineers write matching criteria (apparently through a user interface), which CrowdStrike calls “regex content.” These matching criteria are written against a set of input values, which correspond to the parameters the “regex” uses to perform a detection. The input values can be specific to the Template Type, for instance an IP address for the network Template or the command-line parameters for the process Template. The detection rules written in Template Instances are distributed through a mechanism called Rapid Response Content, which consists of multiple Template Instances bundled together and delivered in a Channel File. According to CrowdStrike, this mechanism is used to “gather telemetry, identify indicators of adversary behaviour, and augment novel detections and preventions on the sensor without requiring sensor code changes.” This content is not program code to be executed directly but data (generically called Sensor Content when it also includes on-sensor AI and machine learning models) that drives the execution of the Sensor Detection Engine.

The problem came from the deployment of a specific Channel File, but the story started with the release of sensor version 7.11 in February 2024. At that time, CrowdStrike introduced a new Template Type providing capabilities around Inter Process Communication (IPC), especially to detect what they describe as novel attack techniques that abuse “named pipes” [4] on Windows. The IPC Template Instances are delivered via Channel File 291 (“CS-00000291-*.sys”).

CrowdStrike’s crash explanation

When this new detection feature was introduced, the Content Interpreter (apparently implemented in CSAgent.sys) “supplied only 20 input values to match against” for an IPC Template, while a regex in an IPC Template could be written against a theoretical set of 21 input values. Every regex is an instance of the IPC Template and is evaluated against an array of 20 entries (where each entry corresponds to one “input value” for the given “regex”), regardless of the number of fields originally defined in the IPC Template. In other words, the instantiation of an IPC Template at runtime relied on code in which the value “20” seems to have been hardcoded instead of being retrieved dynamically from the definition of the Template Type!

According to CrowdStrike, the problem remained undetected because “this parameter count mismatch evaded multiple layers of build validation and testing, as it was not discovered during the sensor release testing process (…). In part, this was due to the use of wildcard matching criteria for the 21st input during testing and in the initial IPC Template Instances”. In other words, the original tests did include a 21st matching criterion, but it was never actually checked against an input value (since only 20 input values were present in the allocated array). Indeed, according to CrowdStrike, “the use of wildcard matching criteria for the 21st input during testing” meant that the 21st input value was never evaluated, which avoided triggering any crash.

On July 19, 2024, two additional IPC Template Instances were deployed, and one of them introduced a non-wildcard matching criterion for the 21st input parameter. The Content Interpreter now had to inspect that parameter, which had never been used by previous IPC Template Instances. However, the internal implementation still limited the number of input values to 20. Hence, when a named pipe communication occurred, the attempt to access the 21st value produced an out-of-bounds memory read beyond the end of the fixed 20-entry input array, resulting in a system crash.
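The mechanism described above can be illustrated with a small Python model. This is only a sketch under stated assumptions: the function `evaluate`, the constant names, and the sample values are hypothetical and are not taken from CrowdStrike’s code. Python raises an `IndexError` where kernel-mode C++ would perform an out-of-bounds read, so the exception merely stands in for the crash.

```python
WILDCARD = "*"

def evaluate(criteria, inputs):
    """Return True when every non-wildcard criterion equals its input value."""
    for index, criterion in enumerate(criteria):
        if criterion == WILDCARD:
            continue  # wildcard: the input at this index is never read
        if inputs[index] != criterion:  # index 20 does not exist in a 20-entry list
            return False
    return True

DEFINED_FIELDS = 21   # fields declared by the (hypothetical) Template Type
SUPPLIED_INPUTS = 20  # values actually handed to the interpreter

inputs = [f"value{i}" for i in range(SUPPLIED_INPUTS)]

# Early instances: wildcard in the 21st field, so the missing input is never touched.
early_instance = ["value0"] + [WILDCARD] * (DEFINED_FIELDS - 1)
early_result = evaluate(early_instance, inputs)

# Later instance: a concrete criterion in the 21st field forces a read past the end.
late_instance = [WILDCARD] * (DEFINED_FIELDS - 1) + ["pipe-name"]
try:
    evaluate(late_instance, inputs)
    late_result = "no error"
except IndexError:
    late_result = "out of bounds"
```

The model shows why the mismatch could stay invisible for months: as long as the 21st criterion is a wildcard, the code path that reads the missing value is never taken.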

CrowdStrike’s mitigations

In its post-incident report, CrowdStrike provides a list of mitigations it is applying to prevent any future crash. The mitigations are divided into different topics. We summarize below the ones that are, from our point of view, the most important in this context.

- Implementation:

  - At sensor compile time (the procedure that generates the Template Instances), the number of input fields in the Template Type is now validated.

  - A runtime check on the input array bounds was added to the Content Interpreter for Channel File 291. In addition, the IPC Template Type was updated to provide the correct number of inputs (21).

- Testing:

  - Test coverage during Template Type development was increased. Test procedures now validate all fields in each Template Type, including tests with non-wildcard matching criteria for each field.

  - Additionally, all future Template Types include test cases with additional scenarios that better reflect production usage, going beyond the static set of 12 test cases originally selected as representative from CrowdStrike’s point of view.

  - Tests were added within the Content Interpreter to ensure that every new Template Instance is evaluated.

- Content Validator:

  - New checks were implemented to ensure that content in Template Instances does not include matching criteria that match over more fields than are being provided as input to the Content Interpreter.

  - It was modified “to only allow wildcard matching criteria in the 21st field”.

- Staged Deployment:

  - Template Instances are now deployed in a staged rollout.

  - Additional deployment layers have been added: customers are divided into rings, and the deployment is evaluated in each ring before being extended to the next one.

  - Solutions have been implemented to give customers increased control over the delivery of Rapid Response Content. Customers can choose where and when Rapid Response Content updates are deployed.
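To make these mitigations more tangible, here is a minimal Python sketch of three of them: a validator check, a runtime bounds check, and a ring-based rollout. All function names, ring names, and sample values are hypothetical illustrations and do not describe CrowdStrike’s actual implementation.

```python
WILDCARD = "*"

def validate_instance(criteria, supplied_inputs):
    """Validator-style check: reject criteria that reach past the supplied inputs."""
    for index, criterion in enumerate(criteria):
        if index >= supplied_inputs and criterion != WILDCARD:
            return False
    return True

def evaluate_checked(criteria, inputs):
    """Runtime bounds check: a missing input is treated as a non-match, not a crash."""
    for index, criterion in enumerate(criteria):
        if criterion == WILDCARD:
            continue
        if index >= len(inputs) or inputs[index] != criterion:
            return False
    return True

def staged_rollout(rings, deploy_ok):
    """Deploy ring by ring and stop at the first ring that reports a failure."""
    completed = []
    for ring in rings:
        if not deploy_ok(ring):
            break
        completed.append(ring)
    return completed

# An instance with a concrete 21st criterion, evaluated against 20 inputs.
bad_instance = [WILDCARD] * 20 + ["pipe-name"]
inputs = ["value"] * 20

accepted = validate_instance(bad_instance, supplied_inputs=20)
matched = evaluate_checked(bad_instance, inputs)
reached = staged_rollout(
    ["internal", "early adopters", "everyone"],
    deploy_ok=lambda ring: ring != "early adopters",  # simulated failure in ring 2
)
```

In this sketch, each layer would have contained the problem on its own: the validator rejects the instance, the bounds check turns the missing value into a non-match, and the rollout stops before reaching the last ring.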

Discussion regarding CrowdStrike’s crash

The explanations provided by CrowdStrike invite some analysis and a few thoughts, which I would like to share here.

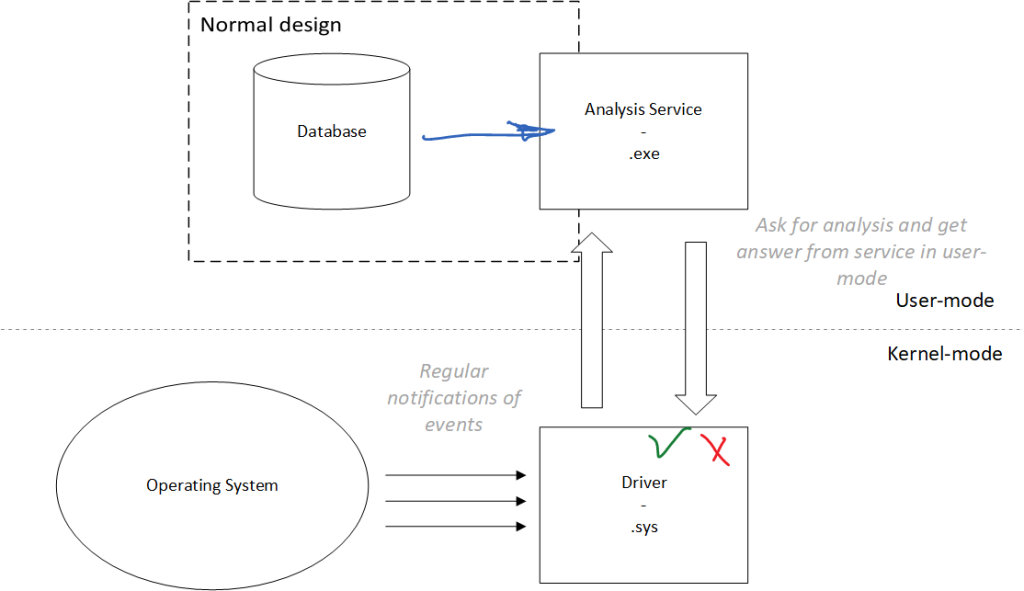

Classic Antivirus/EDR Architecture

The first concerns CrowdStrike’s general software architecture. To that end, we should first explain the “classic” architecture of an antivirus/EDR. By classic, we mean how an antivirus like Microsoft Defender Antivirus works [5], for instance, or simply the sample code given by Microsoft [6] for developing an antivirus for Windows. From a conceptual point of view, an antivirus can be divided into two entities for its main operations. One entity collects information and enforces security in the system, while the other analyses that information. It is a bit like the judicial system: the police do the investigation, the judge decides, and the police enforce the judge’s decision.

The first one operates from the kernel, usually as a driver, that is to say, an MZ-PE executable file whose extension is .sys. To do its job, it registers different sets of callback functions (“routines” in kernel developers’ vocabulary), such as with a WMI provider [7], a file filter [8], or a registry filter [9], to name only a few. That way, the driver is fed with all the system’s events. Most of them are passed directly to the second entity, usually implemented as a service running in user-mode. In other words, the antivirus is a regular process running in the background that answers notifications provided by the kernel driver. This is where the “antivirus analysis” is performed. It can be a local procedure based on a local database, or an online one if supported by a cloud analysis provider. Once the analysis has been performed, the answer is sent back to the kernel driver, where the decision is applied (for instance, to raise an alarm, to block an action, to put a file in quarantine, or just to let it go). A representation of this design is given in Figure 1.
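The division of labour between the two entities can be sketched in a few lines of Python. This is only a conceptual model: the class names `KernelCollector` and `UserModeEngine`, the event fields, and the file paths are hypothetical, and a real driver would of course communicate with its service through kernel interfaces rather than a direct method call.

```python
from dataclasses import dataclass

@dataclass
class Event:
    kind: str    # e.g. "file_open"
    target: str  # e.g. a file path

class UserModeEngine:
    """Stands in for the analysis service: holds the rules and decides."""
    def __init__(self, blocked_targets):
        self.blocked_targets = set(blocked_targets)

    def analyse(self, event):
        return "block" if event.target in self.blocked_targets else "allow"

class KernelCollector:
    """Stands in for the driver: collects events and enforces verdicts."""
    def __init__(self, engine):
        self.engine = engine
        self.log = []

    def on_event(self, event):
        # In a real driver this would be a registered callback routine.
        verdict = self.engine.analyse(event)  # hand the event over for analysis
        self.log.append((event.target, verdict))
        return verdict == "allow"             # enforcement happens here

engine = UserModeEngine(blocked_targets=["C:\\temp\\malware.exe"])
collector = KernelCollector(engine)
first_allowed = collector.on_event(Event("file_open", "C:\\temp\\notes.txt"))
second_allowed = collector.on_event(Event("file_open", "C:\\temp\\malware.exe"))
```

The point of the model is that all rule logic lives in `UserModeEngine`; the collector only forwards events and applies the answer.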

Why do manufacturers choose such a design? Why split the work between information collection in kernel-mode and analysis in user-mode? Firstly, this design follows the adage about keeping things as simple as possible, especially in the kernel of an operating system. Executing code in kernel-mode means having the highest possible privileges on the machine, interfacing directly with the hardware, and potentially being able to bypass any security measure. But with great power comes great responsibility, meaning that any mistake can be catastrophic. Why? Because the kernel is the last line of defence for handling any failure. If a failure is not correctly handled there, something has corrupted the kernel of the system, and the kernel can no longer be reliably trusted. One solution could be to continue as if nothing had happened. But that would mean continuing with a system that has had a critical failure, which could result in arbitrary corruption of the hard drive, erased data, or other security-relevant harm. To avoid such a potentially bigger disaster, we prefer to stop everything and trigger an emergency stop, materialized by a BSOD. When the kernel triggers a BSOD, it chooses the lesser of two evils.

This is why we should do as little as possible in kernel-mode: words for any kernel developer to live by. Indeed, the more code we introduce in the kernel, the higher the probability of making a mistake (especially for junior developers or architects), and the higher the probability of a BSOD. Only what is strictly necessary must be performed in the kernel. This is why the analysis is moved to user-mode, where mistakes are not that expensive (a service crashes, not the machine) and where development is easier (the complexity is lower than in the kernel), meaning more developers are available to do it.

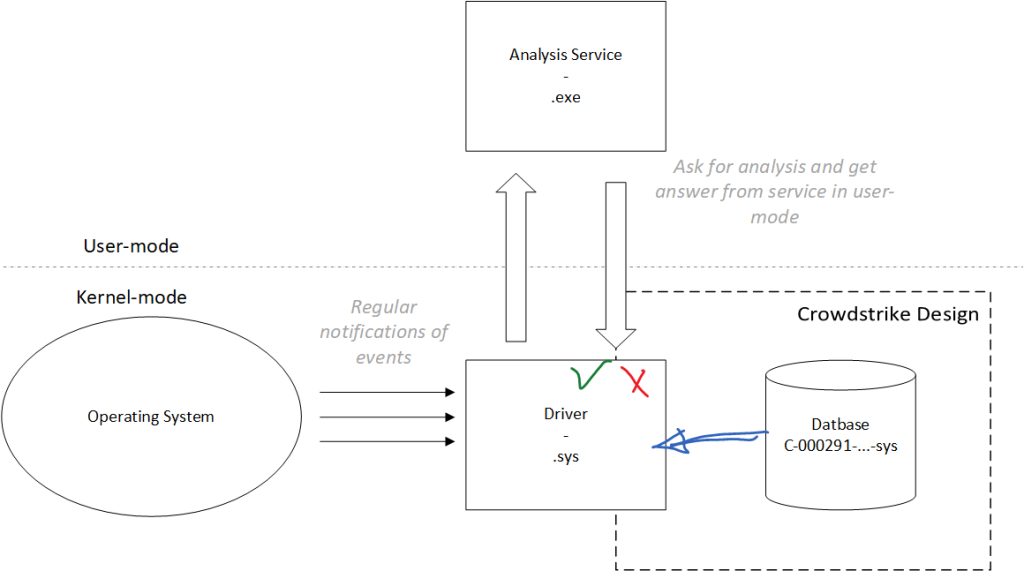

CrowdStrike’s issue and software architecture

According to CrowdStrike’s first explanations [10], the problem came from “a sensor configuration update to Windows systems.” A sensor configuration update is used to tune the protection mechanisms of “Falcon,” the codename of CrowdStrike’s product. CrowdStrike also explained: “This configuration update triggered a logic error resulting in a system crash and blue screen (BSOD) on impacted systems.” In practice, the root of the problem is the “C:\Windows\System32\drivers\CrowdStrike\CS-00000291-*.sys” files, which contain what CrowdStrike calls the IPC Template Instances. Despite the “.sys” extension, such a file is not a driver [11]; it is a file used by CrowdStrike’s driver.

The content of a Channel File, whose purpose has been explained by CrowdStrike, is a set of detection rules for different malware, together with rules that provide measurements or telemetry about activity on the client’s system. Such detection rules are often enriched with logic built on many artifacts provided by the collector over time. If CrowdStrike has the same capabilities as its competitors, rules may sometimes execute specific sub-procedures written in a kind of internal “scripting” language. Rules may also define “resulting actions” to take when facing a given threat (telemetry report, quarantine action, …). Usually, these kinds of “rules” are interpreted inside the antivirus engine, that is, the analysis service running in user-mode. Note that with such a regular architecture, an incorrect rule definition (as happened with CrowdStrike) only impacts the user-mode engine.

But CrowdStrike’s architecture is different. Leaving aside unnecessary details, our observations and CrowdStrike’s own explanations indicate that CrowdStrike’s kernel driver (CSAgent.sys) interprets the content of Channel Files directly in the kernel. Conceptually, it is like implementing (at best) a regex engine (like PCRE [12]) or (at worst) a scripting engine interpreter directly in the kernel. Figure 2 gives a partial and generic overview of CrowdStrike’s architecture, which can be compared with the “regular” architecture in Figure 1. Note that this representation does not consider other CrowdStrike components, which could follow the classical architecture of antivirus software.

Anyone can do whatever they want when implementing software. This is the freedom of the developer. But it turns out that some architectures are better than others. CrowdStrike’s architecture is not problematic by itself if and only if it can ensure that a failure will never happen in the set of detection rules used in the analysis engine (Content Interpreter). There are some technical solutions to reduce that risk, but it does not make any sense to consider this situation as safe.

In a post on the Microsoft Security blog [13] related to the recent CrowdStrike outage and the Windows kernel, David Weston explains that kernel drivers allow for system-wide visibility, relying on system event callbacks to monitor system activity and potentially block some of it. But while analysis or data collection may in some contexts benefit from a kernel driver (for instance, for high-throughput network activity), “there are many scenarios where data collection and analysis can be optimized for operation outside of kernel mode.” David Weston adds that Microsoft continues “to improve performance and provide best practices to achieve parity outside of kernel mode.” More directly, he explains that “there is a trade-off that security vendors must rationalize when it comes to kernel drivers,” between visibility capabilities and the cost of resilience, since in kernel-mode “containment and recovery capabilities are by nature constrained.”

CrowdStrike’s mitigation discussion

Implementation mitigation

What is particularly striking about CrowdStrike’s mitigations is the focus on the 21st input value. While this error can be quickly corrected in a hot patch by adding a few extra checks to avoid the buffer overrun, the measure seems a little too simple. Incorrect memory access deserves to be corrected, but this type of problem is only the symptom of a more severe issue: detection rules being processed in the kernel. What guarantee is there that a similar case will not happen again? The point is not simply that one input value was not correctly instantiated in memory, as happened in the recent CrowdStrike outage; what matters is to ensure that a detection rule can no longer crash the machine. The only real solution is to move the entire detection engine (Content Interpreter) into user-mode. Short of that, a potential remediation could have been to implement a robust system of exception handling [14] for the analysis engine in the kernel driver. At a high level, the parsing procedure should have been executed within an advanced system of try/except, a mechanism perfectly supported in the Windows kernel [15].
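The idea of containing a faulty rule instead of letting it bring down the whole engine can be sketched as follows. This is an illustration only: the function `run_rules` and the rule names are hypothetical, and Python’s `try`/`except` merely stands in for structured exception handling; which faults a kernel-mode handler can actually catch is a separate question that this sketch does not address.

```python
def run_rules(rules, event):
    """Evaluate every rule; isolate the ones that fail instead of aborting."""
    matches, disabled = [], []
    for name, rule in rules.items():
        try:
            if rule(event):
                matches.append(name)
        except Exception:          # stand-in for an exception handler around parsing
            disabled.append(name)  # the faulty rule is skipped, not fatal
    return matches, disabled

event = ["value"] * 20  # 20 supplied input values

rules = {
    "healthy_rule": lambda inputs: inputs[0] == "value",
    "faulty_rule": lambda inputs: inputs[20] == "pipe-name",  # reads a 21st input
}

matches, disabled = run_rules(rules, event)
```

With this design, the healthy rule still produces its detection while the faulty one is set aside, which is the behaviour one would hope for from a detection engine wherever it runs.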

Doing so will definitely take time, not to mention the question of the reason(s) behind the original architecture. Indeed, such a design was probably chosen for good reasons. Moving the engine from kernel-mode to user-mode might impact performance (although driver implementation best practices can preserve it) or threat-catching capabilities. Regarding the latter, if CrowdStrike’s kernel driver is required to catch any threat before automatically started services are running (where the analysis could be performed), it means they are trying to handle kernel-mode threats. And let us be direct: there is not much to be done against a malicious driver. This discussion has been had before: if we figure out that a driver already executing is malicious, it is too late. The attacker is already on the other side of this airtight hatchway. In that position, there are too many possibilities to deactivate the antivirus, corrupt it, or fake the system.

One could argue that an attacker can exploit a vulnerability in a driver, for instance, to elevate privileges. But once again, this means exploiting that driver at boot time, so the attacker already has strong persistence capabilities: those of an administrator, which are sufficient to remove any software from the system, including security software. Of course, one can consider the case where the security software could not be removed or killed. However, this point has also been well discussed, for example, by Raymond Chen from Microsoft in his blog [16].

Testing mitigation

In this incident, there is a problem with how CrowdStrike evaluates what is deployed on client machines. It is interesting to see that the mitigations CrowdStrike proposes on the testing topic are focused on Template Instance testing. In practice, CrowdStrike claims it validates all fields in each Template Type, including tests with non-wildcard matching criteria for each field (which seems to conflict with mitigation number 4 from CrowdStrike, where the Content Validator was modified to “only allow wildcard matching criteria in the 21st field”). More directly, the tests were extended with additional scenarios, ensuring that every new Template Instance is tested. But that ignores the elephant in the room: how could a software configuration update that results immediately (or almost immediately) in a BSOD be deployed after a testing procedure?

Indeed, CrowdStrike explains how the Template Instance evaluation has been improved, but says nothing about the stability of its software updates as such. Since CrowdStrike did not explain this, we can only speculate and base our analysis on our experience.

In regular antivirus/EDR companies, when a new detection rule is forged, it is tested. What is tested in such a procedure? First, that the rule effectively detects what it is supposed to detect (which makes sense; otherwise, the rule would be useless). Then, that the detection rule does not flag a valid file in the system, to avoid what is usually called a “false positive.” Finally, that deploying the rule does not cause any instability in the system, for instance a BSOD. There is no conceptual breakthrough in what is being said here, just common sense and long-standing security best practices.
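The three checks above can be expressed as a simple release gate. The following Python sketch is an illustration under stated assumptions: `release_gate`, the sample dictionaries, and the two rules are hypothetical, and a Python exception only stands in for real instability, which in practice has to be observed on a machine running the actual product.

```python
def release_gate(rule, malicious_samples, benign_samples):
    """Return (ok, reason) for the three checks: detection, false positives, stability."""
    try:
        if not all(rule(sample) for sample in malicious_samples):
            return False, "misses a known threat"
        if any(rule(sample) for sample in benign_samples):
            return False, "false positive"
    except Exception:
        # Any failure while evaluating the rule counts as instability.
        return False, "unstable"
    return True, "ok"

malicious = [{"pipe": "evil"}]
benign = [{"pipe": "spooler"}, {}]  # the second sample has no "pipe" field at all

good_rule = lambda sample: sample.get("pipe") == "evil"
crashing_rule = lambda sample: sample["pipe"] == "evil"  # KeyError on the empty sample

good = release_gate(good_rule, malicious, benign)
bad = release_gate(crashing_rule, malicious, benign)
```

Note that the crashing rule detects the threat correctly and would pass a purely functional test; it is only rejected because the gate also exercises it against inputs it did not expect.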

When the deployment of an update fails, the way deployments are assessed is automatically called into question. To put it bluntly, there are two criteria to consider here: the quality of the tests and the execution of the tests. The analysis can be conducted by cross-referencing these two parameters:

- The tests are correct and executed whenever there is a deployment. But how do we explain events such as the one on July 19th? As a matter of fact, if the evaluation had succeeded, it would have detected the BSOD and prevented the deployment.

- The tests are incomplete but executed whenever there is a deployment. By incomplete, we mean that no one considered BSODs as part of the measurement criteria (even though fixing BSODs is a daily routine for any kernel developer). Also, even if the channel file update had passed the test procedure, it is questionable to consider a test a success on a machine that blue-screened, meaning the machine crashed long before there was time to check effective detection and false positives. Furthermore, incomplete also means that the testing procedure did not cover the conditions in which the BSOD occurred, namely a regular client machine environment. These conditions are not specific to dedicated environments and configurations.

- The tests are correct but, for whatever reason, not executed. It is pointless to speculate about the reasons for such a bypass; it would be up to CrowdStrike to explain this point. However, short-circuiting internal verification procedures is not a trivial act. It raises the question of how many people in a company are able to do so. Updating software on every customer’s machine (whether executable programs or database/configuration files) is probably not an operation everyone is authorized to perform (unless we assume that a single security analyst can update customers’ machines on his or her own). There are bound to be controls, assessments, and counterchecks. Of course, we need to respond quickly to emerging threats. But removing the security procedures (in an IT security company!) to do so is at minimum questionable.

- The tests are non-existent and thus have not been executed. But if we view deployments as a Bernoulli process (a long series of independent trials, each with some chance of failing), experience tells us that such an incident would then have arisen much earlier.
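The Bernoulli argument in the last case can be made concrete with a short calculation. Assume, purely for illustration, that each untested deployment independently causes a crash with probability $p$. The probability that $n$ consecutive deployments all go through without incident is then:

$$
P(\text{no incident in } n \text{ deployments}) = (1 - p)^n
$$

With hypothetical values $p = 0.01$ and $n = 300$, this gives $(0.99)^{300} \approx 0.05$: even a small per-deployment risk makes a long incident-free history unlikely if nothing is ever tested. The values of $p$ and $n$ are assumptions chosen for the example, not figures from CrowdStrike.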

Whatever the explanation is, it is unlikely to be a flattering one. One thing is for sure: something has gone wrong. This “something” may be in the update procedure for the Template Instances. Throughout its root cause analysis, CrowdStrike discusses the evaluation of Template Instances and the related mitigations, but says little about the environment where this evaluation is performed. The only mention says, “A stress test of the IPC Template Type with a test Template Instance was executed in our test environment, which consists of a variety of operating systems and workloads. The IPC Template Type passed the stress test and was validated for use, and a Template Instance was released to production as part of a Rapid Response Content update.” In fact, one could formulate the hypothesis (and it is only a hypothesis) that tests were performed, but that at no point during a Channel File update was there a test in an environment reproducing a classic Windows client (which would have been sufficient to see the BSOD).

One explanation is that the tests were (and may still be, since no mitigation is mentioned) performed with a particular instance of the Content Interpreter, extracted from its driver context and run as an ordinary process that simply loads the analysis engine. That would be hard to believe, since such a test procedure would require extra work to extract the engine from kernel-mode (a kernel-mode implementation differs from a user-mode one). And even so, what would be the point of not directly using CrowdStrike’s software as deployed on the customers’ side? With the real product, one can also measure performance and efficiency during simulation and detection tests.

CrowdStrike mentions that its product passed Microsoft’s Windows Hardware Lab Kit (HLK) and Windows Hardware Certification Kit (HCK). However, these tests only consider the functional aspect of the software (how an antivirus driver reacts to a specific event coming from the system). They do not explicitly consider the updating of rules within the antivirus engine, even though doing so would have helped to avoid the BSOD.

Quality testing is the central point in this story. This is also what is pointed out in the complaint of a class action lawsuit led by the pension fund Plymouth County Retirement Association [17]. The complaint argues that false and misleading statements were made to investors regarding the efficacy of the software platform. It notes that CrowdStrike’s technology was presented as “validated, tested and certified” and that the crash has caused “substantial legal liability and massive reputational damages” to CrowdStrike, impacting the share price. The plaintiffs point out that “this inadequate software testing created a substantial risk that an update to Falcon could cause major outages for a significant number of the Company’s customers.” Nonetheless, “CrowdStrike appears to have comprehensively limited its liability towards users of its software with the inclusion of a limitation of liability clause in its standard terms and conditions” [18]. Note also that kernel panics on Linux had already happened in the past [19], showing that this was not a first for CrowdStrike.

Lessons learned so far

Although this article seriously questions certain architectural choices and the implementation of CrowdStrike’s software, it also questions the organizational processes that led to such an incident. From implementation to deployment, there are many opportunities for error, and in such a case probably more than one occurred. It would be interesting to examine the organisation and the management to learn from the incident how things could be improved (although it remains only a hypothesis that there is something to improve there).

Software development must be made as secure as possible. This covers everything from specifications, choice of technologies, and initial architectural choices to deploying updates on client machines. It is a complex, challenging job that is always prone to error. I do not judge individuals here: anyone can make a mistake, and of course this is independent of motivation: you do not need bad intentions to make dramatic mistakes. But we need to learn from errors to avoid repeating them.

However, perhaps the most important lesson is the reminder that the most essential aspect of computer security is the reliability of the software programmed. If a machine crashes because of a kernel bug, then all the rest of the security suddenly does not matter. One can give all the PowerPoint presentations, talk for hours about cloud architecture, or discuss the organization of security management. Still, if the machine does not boot correctly, it is all for nothing.

Then comes the question of whether such a disaster can happen again. The answer is yes. Why should it be otherwise? A few months ago, I gave a talk explaining how to find vulnerabilities in drivers [20]. Antivirus drivers are only a subset of these, although they are massively deployed in strategic locations. And with our lives becoming ever more interconnected and with ever more devices to secure, the problem will not go away soon.

More generally, it could be relevant to question the internal processes which led to this incident. Independently of CrowdStrike’s case, there may be over-confidence in the software being developed, potentially leading to some tests being overlooked, especially those only indirectly related to development, such as deployment tests. What needs to be imagined and generalized is a broad questioning of how software quality is developed and evaluated (as a whole, and not just the functional aspect of a given function). Perhaps (and this is my personal wish) the main contribution of this crash will be an improvement in the mindset of the software industry.

What can we do? Well, we should stop blindly trusting software vendors. Of course, open source is one approach (though not a guarantee, as reviewing the code is not trivial), but we must be realistic: there is a lot of closed-source software out there, and we must deal with it. The approach may be to analyse the software we deploy on our machines by direct or indirect means. Of course, we cannot analyse everything. But there are not that many drivers, and they are nevertheless highly critical. What stops us from analysing them? Who is looking at what is inside? Who ensures they are compatible with the rest of the system on which they are deployed? How can we assess their risk? Just as we would never let anyone access our network unrestrictedly, we should not let any driver or critical software do the same.

The real problem with software quality (and therefore security) is that more control is needed than exists today. When there is no control (or, at least, no credible threat of control), there is no respect for the rules. Should we finally apply this principle to our software? It may be time to ask what is inside a given piece of software before installing it. Who would install software of proven inadequate quality? This transparency may change how some approach development. But one thing is for sure: there is an urgent need to do something about it.

Cheers,

Baptiste

1. https://www.bsi.bund.de/EN/Service-Navi/Publikationen/Studien/SiSyPHuS_Win10/AP2/SiSyPHuS_AP2_node.html

2. https://www.crowdstrike.com/blog/falcon-content-update-preliminary-post-incident-report/

3. https://www.crowdstrike.com/wp-content/uploads/2024/08/Channel-File-291-Incident-Root-Cause-Analysis-08.06.2024.pdf

4. https://learn.microsoft.com/en-us/windows/win32/ipc/named-pipes

5. n4r1b. Dissecting the Windows Defender Driver – WdFilter.
   Part 1: https://n4r1b.com/posts/2020/01/dissecting-the-windows-defender-driver-wdfilter-part-1/,
   Part 2: https://n4r1b.com/posts/2020/02/dissecting-the-windows-defender-driver-wdfilter-part-2/,
   Part 3: https://n4r1b.com/posts/2020/03/dissecting-the-windows-defender-driver-wdfilter-part-3/,
   Part 4: https://n4r1b.com/posts/2020/04/dissecting-the-windows-defender-driver-wdfilter-part-4/

6. Microsoft. AvScan File System Minifilter Driver: https://github.com/microsoft/Windows-driver-samples/tree/main/filesys/miniFilter/avscan

7. https://learn.microsoft.com/en-us/windows-hardware/drivers/kernel/registering-as-a-wmi-data-provider

8. https://learn.microsoft.com/en-us/windows-hardware/drivers/ddi/fltkernel/nf-fltkernel-fltregisterfilter

9. https://learn.microsoft.com/en-us/windows-hardware/drivers/kernel/filtering-registry-calls

10. https://www.crowdstrike.com/blog/falcon-update-for-windows-hosts-technical-details/

11. A quick look inside the file confirms that it is not an MZ-PE executable. For the clients who found files filled with “zeros”, explanations are given here: https://www.crowdstrike.com/blog/tech-analysis-channel-file-may-contain-null-bytes/

12. https://www.pcre.org/

13. https://www.microsoft.com/en-us/security/blog/2024/07/27/windows-security-best-practices-for-integrating-and-managing-security-tools/#why-do-security-solutions-leverage-kernel-drivers

14. https://learn.microsoft.com/en-us/windows/win32/debug/about-structured-exception-handling

15. https://learn.microsoft.com/en-us/windows/win32/debug/about-structured-exception-handling

16. https://devblogs.microsoft.com/oldnewthing/20130620-00/?p=4033

17. https://www.bernlieb.com/wp-content/uploads/2024/07/20240731-91ea4496ec7c.pdf

18. https://www.ogier.com/news-and-insights/insights/perspective-on-the-recent-crowdstrike-outage-implications-for-insurance-and-cyber-resilience/

19. https://www.theregister.com/2024/07/21/crowdstrike_linux_crashes_restoration_tools/

20. https://hek.si/predavanja#371